OCS Front-End Health: Logging and Monitoring

It goes without saying that monitoring is a best practice. Whether the system is a database or an application that sits a level above it, any engineering group worth its salt monitors for health.

But what constitutes health? For OCS R2, a variety of technologies work together with the base OS and hardware to provide even simple IM and Presence. As a result, a fairly thorough combination of monitoring (watching that certain metrics stay within predefined thresholds) and performance counter logging is required, both to ensure maximum uptime and to support a conclusive root-cause investigation when service is interrupted.

Monitoring - OCS Front Ends and the SCOM Management Pack for R2

While it would be nice to have an out-of-the-box, end-all-be-all solution, that is not likely. That said, the Management Pack for R2 provides baseline information about the health of your Front End (FE) and its connectivity to the world around it, particularly the SQL Back End (BE).

For example, with regard to connectivity to the SQL BE, a couple of key Front End metrics should be understood:

Queue Depth – Average number of database requests waiting to be executed

Queue Latency – Average time (msec) a request is held in the database queue

These two metrics speak to the dialogue between the OCS FE and the SQL BE. Queue Depth is a measurement of “how big the line is” for messages waiting to go to SQL, and the Latency metric tells us “how slow the line is” moving to SQL. Both must be watched because a “big line” that moves fast is indicative of different issues than a line (big or small) that’s not moving at all.

More importantly, if either metric reaches a suboptimal level, users will begin to receive SIP 503 responses (OCS Server Not Available). Alerting on these metrics is therefore highly valuable, because your chance of catching a problem before it impacts the user community depends on how quickly you can address the root cause of the excessive queuing (SQL disk I/O, memory shortage, SQL blocking, network, etc.).
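The depth-versus-latency distinction can be expressed as a simple alert rule. The sketch below is a minimal Python illustration, not part of the Management Pack: the threshold values and the state names ("healthy", "busy", "stalled") are hypothetical and should be replaced with thresholds tuned to your own baseline.

```python
from dataclasses import dataclass

# Hypothetical alert thresholds, for illustration only; they are not
# values taken from the OCS R2 Management Pack.
DEPTH_THRESHOLD = 50.0        # requests waiting in the database queue
LATENCY_THRESHOLD_MS = 100.0  # msec a request is held in the queue


@dataclass
class QueueSample:
    depth: float       # Queue Depth: average number of waiting requests
    latency_ms: float  # Queue Latency: average msec a request waits


def classify(sample: QueueSample) -> str:
    """Map one Queue Depth / Queue Latency sample to a coarse state."""
    if sample.latency_ms > LATENCY_THRESHOLD_MS:
        # The line is barely moving, whatever its length; investigate the
        # usual causes of excessive queuing (SQL disk I/O, memory,
        # blocking, network).
        return "stalled"
    if sample.depth > DEPTH_THRESHOLD:
        # A big line that still moves fast, which points to different
        # issues than a stalled one.
        return "busy"
    return "healthy"
```

Keeping "stalled" separate from "busy" mirrors the point above: the two situations call for different investigations, so they deserve different alerts.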

Logging – What’s not in the SCOM Management Pack

Determining the root cause of an OCS outage is no minor feat, and because the SQL BE must also be considered, the surface area for a root-cause search is rather large.

Unfortunately, the OCS Management Pack enables relatively few metrics by default, and some metrics that are essential for correlating FE data with, say, a SQL Diag report are not present at all. That correlation lets an engineer troubleshooting an outage pinpoint the source of the event more accurately, because both the FE and the BE are being logged thoroughly.
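Correlating FE and BE data usually comes down to lining up samples by time. Assuming both the FE counter log and the SQL-side data have been exported as lists of (timestamp, value) pairs sorted by time, a nearest-timestamp join might look like the following sketch; the function name and the 15-second tolerance are illustrative assumptions.

```python
from bisect import bisect_left
from datetime import datetime, timedelta
from typing import Optional

Sample = tuple[datetime, float]  # (timestamp, counter value)


def correlate(
    fe_samples: list[Sample],
    be_samples: list[Sample],
    tolerance: timedelta = timedelta(seconds=15),
) -> list[tuple[datetime, float, Optional[float]]]:
    """Pair each FE sample with the BE sample closest to it in time.

    Both inputs must be sorted by timestamp. An FE sample with no BE
    sample within `tolerance` is paired with None.
    """
    be_times = [t for t, _ in be_samples]
    pairs = []
    for t, fe_value in fe_samples:
        i = bisect_left(be_times, t)
        best: Optional[int] = None
        # Only the neighbours on either side of the insertion point
        # can be the closest BE sample.
        for j in (i - 1, i):
            if 0 <= j < len(be_times):
                gap = abs(be_times[j] - t)
                if gap <= tolerance and (best is None or gap < abs(be_times[best] - t)):
                    best = j
        be_value = be_samples[best][1] if best is not None else None
        pairs.append((t, fe_value, be_value))
    return pairs
```

The tolerance should be at least as large as the coarser of the two sample intervals; otherwise valid pairs are dropped as None.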

The minimum set of performance counters for troubleshooting performance on an OCS R2 FE is listed below:

\AVMCU - 00 - Operations\*

\AVMCU - 01 - Planning\*

\AVMCU - 02 - Informational\*

\CAA - 00 - Operations\*

\CAA - 01 - Planning\*

\CAS - 00 - Planning\*

\CAS - 01 - Informational\*

\LC:Arch Agent - 00 - MSMQ\*

\LC:AsMcu - 00 - AsMcu Conferences\*

\LC:CCS - 00 - TPCP Processing\*

\LC:ClientVersionFilter - 00 - Client Version Filter\*

\LC:DATAMCU - 00 - DataMCU Conferences\*

\LC:DLX - 00 - Address Book and Distribution List Expansion\*

\LC:IIMFILTER - 00 - Intelligent IM Filter\*

\LC:ImMcu - 00 - IMMcu Conferences\*

\LC:LDM - 00 - LDM Memory\*

\LC:QMSAgent - 00 - QoEMonitoringServerAgent\*

\LC:RGS - 00 - Response Group Service Hosting\*

\LC:RGS - 01 - Response Group Service Match Making\*

\LC:RGS - 02 - Response Group Service Workflow\*

\LC:RoutingApps - 00 - UC Routing Applications\*

\LC:SIP - 01 - Peers(_Total)\*

\LC:SIP - 02 - Protocol\*

\LC:SIP - 03 - Requests\*

\LC:SIP - 04 - Responses\*

\LC:SIP - 05 - Routing\*

\LC:SIP - 07 - Load Management\*

\LC:SipEps - 00 - Sip Dialogs(_Total)\*

\LC:SipEps - 01 - SipEps Transactions(_Total)\*

\LC:SipEps - 02 - SipEps Connections(_Total)\*

\LC:SipEps - 03 - SipEps Incoming Messages(_Total)\*

\LC:SipEps - 04 - SipEps Outgoing Messages(_Total)\*

\LC:USrv - 00 - DBStore\*

\LC:USrv - 04 - Rich presence subscribe SQL calls\*

\LC:USrv - 05 - Rich presence service SQL calls\*

\MEDIA - 00 - Operations(*)\*

\MEDIA - 01 - Planning(*)\*

\MEDIA - 02 - Informational(*)\*

\Memory\*

\Network Interface(*)\*

\Process(ASMCUSvc)\*

\Process(ABServer)\*

\Process(AVMCUSvc)\*

\Process(DataMCUSvc)\*

\Process(IMMcuSvc)\*

\Process(QmsAgentSvc)\*

\Process(OcsAppServerHost)\*

\Process(OcsAppServerHost#1)\*

\Process(OcsAppServerHost#2)\*

\Process(OcsAppServerHost#3)\*

\Process(smlogsvc)\*

\Process(RtcHost)\*

\Process(RTCSrv)\*

\Processor(*)\*

\TCPv4\*

\UDPv4\*

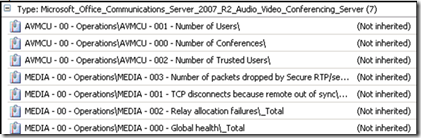

In the cases where there is an asterisk (‘*’) there is, within the SCOM MP, more than one counter in that subcategory. For example, for the single entry, \AVMCU - 00 - Operations\* , there are seven actual counters:

As such, the list is far more comprehensive than its 56 entries suggest. In fact, the Management Pack has over 300 targets.
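The wildcard follows the standard Windows performance counter path syntax, \Object(Instance)\Counter: an asterisk in the counter position selects every counter in the object, and (*) selects every instance. The hypothetical helper below is a minimal sketch, not an OCS or SCOM API. It splits an entry from the list into those parts so wildcard entries can be told apart from single counters.

```python
import re

# \Object(Instance)\Counter; the (Instance) part is optional.
_COUNTER_PATH = re.compile(
    r"^\\(?P<object>[^\\(]+?)(?:\((?P<instance>[^)]*)\))?\\(?P<counter>.+)$"
)


def parse_counter_path(path: str) -> dict:
    """Split a counter path into object, instance, and counter parts."""
    m = _COUNTER_PATH.match(path.strip())
    if m is None:
        raise ValueError(f"not a counter path: {path!r}")
    instance, counter = m.group("instance"), m.group("counter")
    return {
        "object": m.group("object"),
        "instance": instance,              # None when no instance is given
        "counter": counter,
        "all_instances": instance == "*",  # (*) = every instance
        "all_counters": counter == "*",    # \* = every counter in the object
    }
```

For example, \LC:SIP - 01 - Peers(_Total)\* parses to the object "LC:SIP - 01 - Peers", the instance "_Total", and all counters.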

However, over 60 of the targets in the MP are not enabled by default, and another 18 of the categories listed above are not included in the MP at all:

\LC:Arch Agent - 00 - MSMQ\*

\LC:AsMcu - 00 - AsMcu Conferences\*

\LC:CCS - 00 - TPCP Processing\*

\LC:ClientVersionFilter - 00 - Client Version Filter\*

\LC:IIMFILTER - 00 - Intelligent IM Filter\*

\LC:LDM - 00 - LDM Memory\*

\LC:RGS - 00 - Response Group Service Hosting\*

\LC:RGS - 02 - Response Group Service Workflow\*

\LC:SIP - 03 - Requests\*

\LC:SIP - 05 - Routing\*

\LC:SipEps - 00 - Sip Dialogs(_Total)\*

\LC:SipEps - 01 - SipEps Transactions(_Total)\*

\LC:SipEps - 02 - SipEps Connections(_Total)\*

\LC:SipEps - 03 - SipEps Incoming Messages(_Total)\*

\LC:SipEps - 04 - SipEps Outgoing Messages(_Total)\*

\LC:USrv - 04 - Rich presence subscribe SQL calls\*

\LC:USrv - 05 - Rich presence service SQL calls\*

\MEDIA - 02 - Informational(*)\*
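Counter names copied between documents, MP exports, and logging tools often drift in small ways, such as an en dash in place of a hyphen or a missing leading backslash, which is enough to defeat a simple comparison. Assuming you can export the list of counters a collector currently gathers, the following sketch (the function names are hypothetical) normalizes both lists and reports which required counters are missing.

```python
import re


def normalize(path: str) -> str:
    """Canonicalize a counter path copied from a document or export."""
    path = path.strip().replace("\u2013", "-")  # en dash -> hyphen
    path = re.sub(r"\s+", " ", path)            # collapse runs of whitespace
    if not path.startswith("\\"):
        path = "\\" + path                      # restore the leading backslash
    return path


def missing_counters(required: list[str], collected: list[str]) -> list[str]:
    """Return the required counter paths the collector does not gather."""
    have = {normalize(p) for p in collected}
    return sorted({normalize(p) for p in required} - have)
```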

To gather adequate data for determining the root cause of intermittent service interruptions, or of any other FE health problem, the counters listed above must be added to a performance logging utility, whether SCOM or another tool. Only then can the collected data be used alongside SQL Diag and performance data to isolate systemic failures.
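One way to add these counters to a logging utility outside SCOM is the built-in Windows logman tool, which can read counter paths from a file (one per line) through its -cf option. The sketch below writes such a file and builds the logman command line without running it. The collector name, output path, 15-second sample interval, and binary log format are assumptions to adjust for your environment.

```python
from pathlib import Path

# Hypothetical collector settings; adjust for your environment.
COLLECTOR = "OCS_FE_Baseline"
OUTPUT = r"C:\PerfLogs\OCS_FE_Baseline"
INTERVAL = "00:00:15"  # hh:mm:ss between samples


def write_counter_file(counters: list[str], path: str) -> None:
    """Write one counter path per line, the layout logman -cf reads."""
    # The counter names are plain ASCII; a pasted en dash fails loudly here.
    Path(path).write_text("\n".join(counters) + "\n", encoding="ascii")


def logman_command(counter_file: str) -> list[str]:
    """Build, but do not run, the command that creates the collector."""
    return [
        "logman", "create", "counter", COLLECTOR,
        "-cf", counter_file,
        "-si", INTERVAL,
        "-f", "bin",  # binary .blg log, viewable in Performance Monitor
        "-o", OUTPUT,
    ]
```

Running the command (for example with subprocess.run(cmd, check=True)) creates the collector, and logman start OCS_FE_Baseline starts it. The sample interval is the main storage trade-off: shorter intervals give finer correlation with SQL Diag data at the cost of larger logs.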

Conclusion

OCS is a massive product with connections to, and dependencies on, a variety of other systems. To ensure maximum uptime and give yourself the best chance of conclusively troubleshooting an outage, go “above and beyond” the standard metrics and, in some cases, beyond the OCS R2 Management Pack for SCOM. Organizations with the flexibility may wish to keep two levels of logging available: a baseline that runs continuously during normal operation, and a more detailed level that is triggered when performance degrades.
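The two-level approach can be sketched as a small state machine. This is an illustration, not a feature of OCS or SCOM: the trigger metric is assumed to be the Queue Latency value discussed earlier, and the profile contents, sample intervals, and enter/exit thresholds are all hypothetical. Using separate enter and exit thresholds (hysteresis) is a design choice added here so the logger does not flip back and forth when latency hovers near a single value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoggingProfile:
    name: str
    sample_interval_s: int     # seconds between samples
    counters: tuple[str, ...]


# Hypothetical profiles: a lean always-on baseline and a detailed superset.
BASELINE = LoggingProfile(
    "baseline", 60, (r"\Memory\*", r"\Processor(*)\*", r"\LC:USrv - 00 - DBStore\*")
)
DETAILED = LoggingProfile(
    "detailed", 5,
    BASELINE.counters + (r"\LC:SIP - 07 - Load Management\*", r"\Process(RTCSrv)\*"),
)


def choose_profile(
    latency_ms: float,
    current: LoggingProfile,
    enter_ms: float = 100.0,  # hypothetical: switch to detailed above this
    exit_ms: float = 50.0,    # hypothetical: switch back below this
) -> LoggingProfile:
    """Pick the logging profile for the latest Queue Latency sample."""
    if current == BASELINE and latency_ms > enter_ms:
        return DETAILED
    if current == DETAILED and latency_ms < exit_ms:
        return BASELINE
    return current
```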

______________________________________________

A Side Note about SQL

When there is a serious concern about OCS, contact a SQL support engineer for help gathering a SQL Diag report and additional performance metrics from the SQL BE. Typically, this Diag report includes:

Processor - % Processor Time - _Total

Process - % Processor Time - sqlservr

PhysicalDisk - % Idle Time - <All instances>

PhysicalDisk - Avg. Disk Queue Length - <All instances>

…as well as data such as deadlock traces (which can also be captured by enabling trace flag 1204). For more on deadlock tracing, see: https://blogs.msdn.com/bartd/archive/2006/09/09/Deadlock-Troubleshooting_2C00_-Part-1.aspx.

It is usually best to have a DBA fine-tune the Diag collection, because capturing Profiler data can consume a great deal of storage and may need to be run separately from the rest of the Diag.