POGO

Pogo aka PGO aka Profile Guided Optimization

My name is Lawrence Joel and I am a Software Developer Engineer in Testing working with the C/C++ Backend Compiler group. For today’s topic I want to blog about a pretty cool compiler optimization called Profile Guided Optimization (PGO, or Pogo as we in the C/C++ team like to call it). The tool is available in Microsoft Visual C++ 2005 and later. In this post I will describe what PGO is, how it can improve your application and how to use it.

What is PGO?

PGO is an approach to optimization where the compiler uses profile information to make better optimization decisions for the program. Profiling is the process of gathering information about how the program is used at runtime. In a nutshell, PGO optimizes based on user scenarios, whereas static optimizations rely only on the structure of the source files.

PGO has a three-phase approach. The first phase can be known as the instrumentation phase (see Figure 1). In this phase, the linker takes the CIL files (these are produced by the front-end compiler with the /GL flag, e.g. cl.exe foo.cpp /GL) and passes the modules to the C/C++ Backend Compiler. The Backend Compiler then inserts probe instructions wherever they are needed. A .pgd file is created alongside the executable; this is a database file that is used in the later phases. Note that the instrumented executable is bloated because of the probes.

Figure 1: Instrumentation Phase

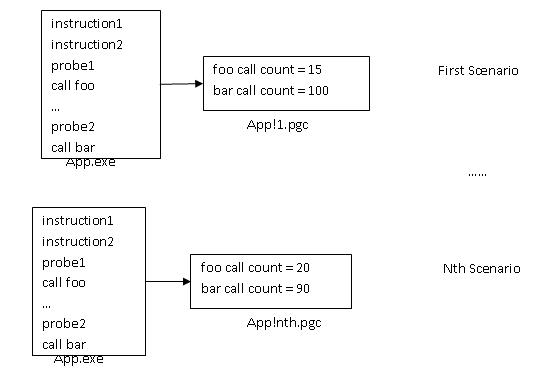
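To make the idea of a probe more concrete, here is a hand-written sketch of what instrumentation conceptually does. It is only an illustration: the struct `ProbeCounts`, the global `g_probes` and the function `classify` are made-up names, and the real probes inserted by the Backend Compiler are not written in source code like this.

```cpp
#include <cstdint>

// Hypothetical stand-in for the counters that an instrumented build maintains.
struct ProbeCounts {
    std::uint64_t calls = 0;         // times classify() was entered
    std::uint64_t negative = 0;      // times the "x < 0" branch was taken
    std::uint64_t non_negative = 0;  // times the other branch was taken
};

ProbeCounts g_probes;  // in a real PGO build this data ends up in a .pgc file

// An ordinary function with counters added by hand at each point of interest.
int classify(int x) {
    ++g_probes.calls;
    if (x < 0) {
        ++g_probes.negative;
        return -1;
    }
    ++g_probes.non_negative;
    return 1;
}
```

After a few runs the counters show which branch is hot, which is the kind of information the later phases rely on.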

The second phase can be known as the training phase. This is where you run the instrumented executable under different scenarios. The probes record runtime information and save the data to a .pgc file. After each run an appname!#.pgc file is created (where appname is the name of the running application and # is 1 + the number of appname!#.pgc files already in the directory). For example, in Figure 2, the method call information for each scenario run of an executable is collected and recorded in a .pgc file. Note: to use PGO effectively, make sure that your scenarios have good coverage of your application.

The third phase can be known as the PG Optimization phase (see Figure 3). In this phase the .pgc files are merged into the .pgd file, which the C/C++ Backend Compiler then uses to make better optimization decisions and thus produce a more efficient executable.

Figure 3: PG Optimization Phase

What advantages does Pogo provide?

Pogo optimizes the most commonly touched areas in a program. With the profile, the compiler has a better idea of the common inputs and control flow of the application. Here is a partial list of the optimizations that PGO provides:

· Inlining – By weighing method calls with the number of calls per execution, the compiler can make better inlining decisions.

· Virtual Call Speculation – If a particular derived type is often passed into a method, then its override can be called directly and inlined. This helps by limiting the number of calls made through the vtable.

· Basic Block Reordering – This optimization finds the most executed paths and places the basic blocks of those paths spatially closer together. This improves locality, which helps instruction cache usage and branch prediction. Also, code that is not used during the training phase is moved to the bottom-most section. Doing this together with “function layout”, described below, can significantly reduce the working set (number of pages used in one time interval) of sizeable applications.

· Size/Speed Optimization – With profile information, the compiler can find out the frequency of function usage. With this information the compiler can optimize for speed on the functions that are more frequently used and optimize for size on the functions that are less frequently used.

· Function Layout – Functions are placed in the same section if they are mainly used together in the profiled scenarios.

· Conditional Branch Optimization – An example is an if/else block. If the condition is more often false than true, it is better to place the else block before the if block.
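As an illustration of Virtual Call Speculation from the list above, the sketch below writes out by hand roughly what the transformation amounts to. The types `Shape`, `Triangle` and `Square` are hypothetical, and the `typeid` check is only a readable stand-in for whatever type test the compiler actually generates; assume here that the profile showed `Square` to be the common case.

```cpp
#include <typeinfo>

struct Shape {
    virtual ~Shape() = default;
    virtual int sides() const = 0;
};
struct Triangle final : Shape {
    int sides() const override { return 3; }
};
struct Square final : Shape {
    int sides() const override { return 4; }
};

// Plain version: every call goes through the vtable.
int count_sides(const Shape& s) {
    return s.sides();
}

// Speculated version: guess the hot type first, fall back to the vtable.
int count_sides_speculated(const Shape& s) {
    if (typeid(s) == typeid(Square)) {
        // Qualified call: no virtual dispatch, so it can be inlined.
        return static_cast<const Square&>(s).Square::sides();
    }
    return s.sides();  // uncommon types still take the virtual call
}
```

Both functions return the same results; the speculated one just pays for a cheap type test to avoid the indirect call on the common path.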

How to use PGO?

Here are the steps for a standard usage of PGO:

1. Compile the source code files that you want to be profiled with the /GL flag.

2. Link all the files with /LTCG:PGINSTRUMENT (or /LTCG:PGI). This will create a .pgd file alongside your executable file. Note that when you link with /LTCG:PGI, some optimizations may be overridden to make way for the instrumentation. The affected optimizations are the ones you request with /Ob, /Os or /Ot.

3. Train the application by running it with different scenarios.

4. Re-Link the files with /LTCG:PGOPTIMIZE (or /LTCG:PGO) to produce an optimized image of the application.

You might find yourself in a situation where you updated the source files after the .pgd file was created. If you were to re-link the object files with /LTCG:PGO, the profile information would be ignored. If you only made small changes to the source files, it would be far too costly to repeat the whole process of creating a new .pgd file and new .pgc files. To overcome this problem you can instead re-link the files with /LTCG:PGUPDATE (or /LTCG:PGU). This flag allows the linker to compile the new source code using the original .pgd file.
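To put the steps and the /LTCG:PGUPDATE case side by side, here is a small sketch of a helper that lists the command lines for one PGO cycle, the way a build script might. The function name `pgo_build_commands`, the scenario arguments and the use of /c to compile without linking are my own choices for illustration; only /GL and the /LTCG switches come from the steps above.

```cpp
#include <string>
#include <vector>

// Lists the command lines for one PGO cycle of <base>.cpp.
// `scenarios` holds hypothetical program arguments, one training run each.
std::vector<std::string> pgo_build_commands(
        const std::string& base,
        const std::vector<std::string>& scenarios,
        bool sources_changed_after_training) {
    std::vector<std::string> cmds;
    const std::string compile = "cl /c /GL " + base + ".cpp";
    const std::string obj = base + ".obj";

    cmds.push_back(compile);                           // step 1
    cmds.push_back("link /LTCG:PGINSTRUMENT " + obj);  // step 2: also creates the .pgd
    for (const std::string& args : scenarios) {
        cmds.push_back(base + ".exe " + args);         // step 3: each run writes a .pgc
    }
    if (sources_changed_after_training) {
        cmds.push_back(compile);                       // recompile the edited source
        cmds.push_back("link /LTCG:PGUPDATE " + obj);  // reuse the original .pgd
    } else {
        cmds.push_back("link /LTCG:PGOPTIMIZE " + obj);  // step 4
    }
    return cmds;
}
```

Calling `pgo_build_commands("app", {"deposit", "withdraw"}, false)` gives the four standard steps with two training runs; passing `true` swaps the final link for the /LTCG:PGUPDATE variant.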

Another useful part of PGO is the ability to manage your .pgc files. Visual Studio provides a tool called Pgomgr which allows you to set priorities on the trained scenarios. For example, an ATM software company notices that the most common transactions performed on their software are withdrawals and deposits. It would be in their best interest to give those transactions a higher priority than the other transactions made on their software. This can be done by running the following: pgomgr /merge:2 appname!1.pgc appname.pgd. This gives appname!1.pgc a weight of 2; the default weight for a .pgc file is 1. When the files are re-linked with /LTCG:PGO or /LTCG:PGU, appname!1.pgc will have a higher priority than the other .pgc scenarios.
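One way to picture what a merge weight does is to treat each .pgc file as a table of counts and the weight as the number of times that table is added to the database. The sketch below is only a mental model under that assumption; `Counts`, `merge_weighted` and the function names in the usage are hypothetical and say nothing about how Pgomgr stores its data internally.

```cpp
#include <cstdint>
#include <map>
#include <string>

// Hypothetical profile: function name -> number of calls seen in one run.
using Counts = std::map<std::string, std::uint64_t>;

// Adds one training run to the database, counted `weight` times.
void merge_weighted(Counts& database, const Counts& run, std::uint64_t weight = 1) {
    for (Counts::const_iterator it = run.begin(); it != run.end(); ++it) {
        database[it->first] += it->second * weight;
    }
}
```

With a withdrawal run merged at weight 2 and a balance-check run merged at the default weight, equally long runs leave the withdrawal path looking twice as hot to the optimizer.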

If you only want to gather profile information for a particular interval of execution or time, there are a couple of ways to go about it. A tool called Pgosweep interrupts a running program, stores the current profile information in a new .pgc file and clears the information from the runtime data structures. For example, if you have an application that does not end and you want to differentiate between its daytime behavior and its nighttime behavior, you can run the following: pgosweep app.exe daytime.pgc. Another approach is a helper function called PgoAutoSweep, which the application calls itself to partition profile information during execution. The example below was taken from MSDN’s Walkthroughs in Visual C++ 2008, “Walkthrough: Using Profile-Guided Optimizations” (current link: http://msdn.microsoft.com/en-us/library/xct6db7f.aspx). It creates two .pgc files. The first contains data that describes the runtime behavior until count is equal to 3, and the second contains the data collected after this point until application termination.

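```cpp
#include <stdio.h>
#include <windows.h>
#include <pgobootrun.h>

int count = 10;
int g = 0;

void func2(void)
{
    printf("hello from func2 %d\n", count);
    Sleep(2000);
}

void func1(void)
{
    printf("hello from func1 %d\n", count);
    Sleep(2000);
}

int main(void)
{
    while (count--)
    {
        if (g)
            func2();
        else
            func1();

        if (count == 3)
        {
            // Save everything profiled so far to the first .pgc file,
            // then switch to func2 for the remaining iterations.
            PgoAutoSweep("func1");
            g = 1;
        }
    }
    // Save the data collected since the first sweep to the second .pgc file.
    PgoAutoSweep("func2");
    return 0;
}
```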

Note: To build the example I had to run cl app.cpp /GL "%VSPATH%\VC\lib\pgobootrun.lib", where %VSPATH% is the path to your latest Microsoft Visual Studio program directory.

For more information on PGO, please read Kang Su’s excellent article under MSDN’s Unmanaged C++ Articles titled “Profile-Guided Optimization with Microsoft Visual C++ 2005”. Current link: http://msdn.microsoft.com/en-us/library/aa289170.aspx. For information on PGO usage, see the “Profile-Guided Optimizations” page in MSDN’s C/C++ Build Tools section. Current link: http://msdn.microsoft.com/en-us/library/e7k32f4k.aspx.
