Finding and pre-processing datasets for use with HDInsight

Free datasets

There are many difficult aspects to Big Data, and getting a good, clean, well-tagged dataset is the first barrier. After all, you cannot do much data processing without data! Many companies have yet to discover the value of their data. For those of you who want to play with HDInsight, the good news is that there are plenty of free datasets on the internet. Here are some examples:

The Million Song Dataset: A freely available collection of audio features and metadata for a million contemporary popular music tracks. The full dataset is roughly 280 GB. This is a very interesting dataset for a recommendation engine, and I have written a simple tutorial on WindowsAzure.com that walks you through building one: https://www.windowsazure.com/en-us/manage/services/hdinsight/recommendation-engine-using-mahout/

Freebase: A community-curated database of well-known people, places, and things (https://www.freebase.com/). Freebase stores its data in a graph structure that describes the relationships among topics and objects, and it provides APIs for running MQL queries against the graph. Freebase is very useful for data mashups: you can make fact lookups against Freebase part of your big data processing.

Data.gov: One of my favorite places to find data is Data.gov, which contains datasets ranging from census to earthquake to economic data.

Wikipedia and its sub-projects (https://en.wikipedia.org/wiki/Wikipedia:Database_download): You can download the entire Wikipedia if you want to. I am personally guilty of downloading hundreds of gigabytes from Wikipedia for parsing projects a few years ago.

DataMarket: Microsoft has its own data market on Windows Azure, and you can access its datasets through OData. I have written a hands-on lab on how to get this data into HDInsight: https://github.com/WindowsAzure-TrainingKit/HOL-WindowsAzureHDInsight/blob/master/HOL.md

These are just a few examples of free datasets you can get from the internet.

An example: Getting the Gutenberg free books dataset

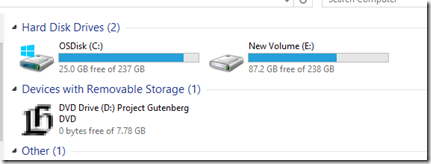

If we want a large, well-structured dataset for a simple word count example, where would we go to find one? We might crawl the web or search our own computers for text documents, but that can be quite involved. Luckily, Project Gutenberg (gutenberg.org) has a huge collection of free books, and its largest and latest collection can be downloaded as DVD ISO images that contain much of the archive. Here is the link to a mirror: ftp://snowy.arsc.alaska.edu/mirrors/gutenberg-iso I recommend the ISO image pgdvd042010.iso, which is about 8 GB. Once you have downloaded the ISO, take a look inside. On older versions of Windows, you might want to use WinRAR to peek inside; Windows 8 mounts the ISO as a drive for you automatically.
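Downloading an 8 GB image in one go can be fragile. As a minimal Python 3 sketch (the helper name `download` is hypothetical and not part of the original crawler), the file can be streamed to disk in fixed-size chunks with `urllib.request`, which handles ftp://, http:// and file:// URLs, so the whole ISO never has to fit in memory:

```python
import shutil
import urllib.request


def download(url, dest_path, chunk_size=1024 * 1024):
    """Hypothetical helper: stream `url` to `dest_path` and return `dest_path`.

    urllib.request understands ftp://, http(s):// and file:// URLs, so the
    same helper works for an FTP mirror or a local copy of the ISO.
    """
    with urllib.request.urlopen(url) as response, open(dest_path, "wb") as out:
        # copyfileobj reads at most chunk_size bytes at a time, so memory use
        # stays flat even for a multi-gigabyte file.
        shutil.copyfileobj(response, out, chunk_size)
    return dest_path
```

You would call it as `download(url, "pgdvd042010.iso")`, with `url` set to the ISO's location on whichever mirror you use.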

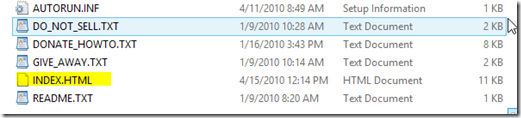

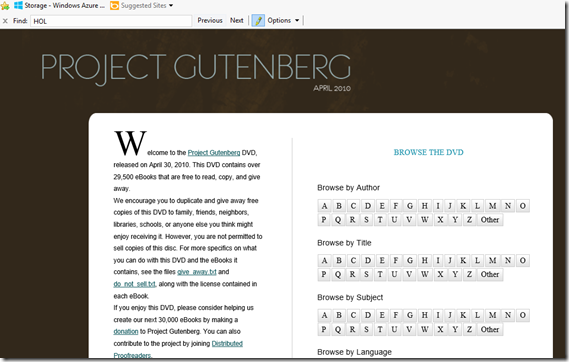

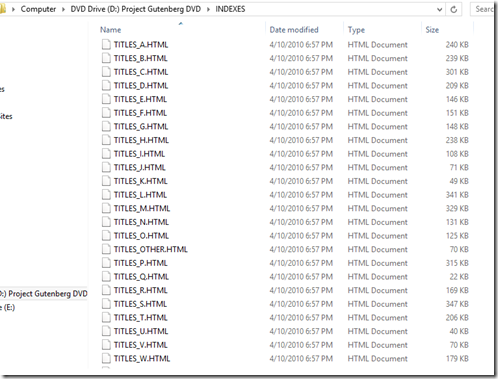

The ISO contains a web interface, INDEX.HTML, and many books in various formats. Some books have images, so they are in HTML or RTF format; others are plain text without any illustrations. There are also books in different languages and in various encodings. As you can see, even preparing this modest dataset becomes a big job. In our case, we want all the English text files, so we will use the metadata from the index pages as much as we can. Open the DVD drive, right-click INDEX.HTML, and choose Open With > Internet Explorer.
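To get a feel for how mixed the archive is before writing any parsing code, a quick inventory helps. Here is a small Python 3 sketch (the function name `count_extensions` is illustrative, not from the original script) that walks the extracted ISO directory and counts files by extension:

```python
import os
from collections import Counter


def count_extensions(root):
    """Hypothetical helper: count files under `root` by lower-cased extension."""
    counts = Counter()
    for _dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Files without an extension are grouped under "(none)".
            ext = os.path.splitext(name)[1].lower() or "(none)"
            counts[ext] += 1
    return counts
```

For example, `count_extensions(r"E:\temp").most_common(10)` lists the ten most common file types and how many of each there are.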

Now feel free to browse the index pages. You will soon discover that the pages contain good clues about whether each book is in English.

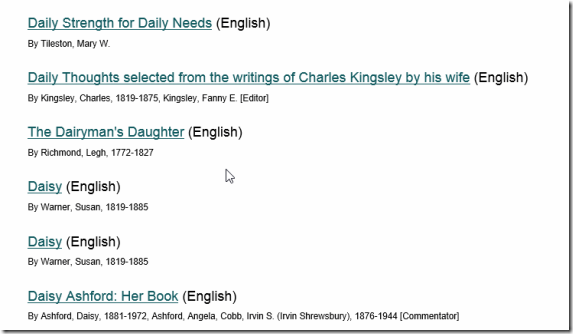

Click on Browse by title:

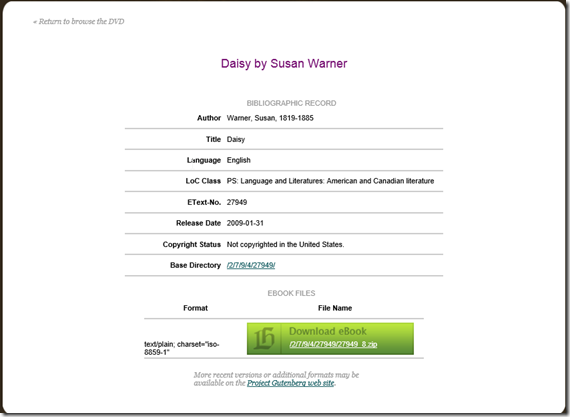

Each book also has its own HTML page.

The best way to get all the English text is to write a simple crawler that copies all the English documents into a directory for further processing. The code is relatively straightforward; I spent a couple of hours writing it in Python, but you are welcome to use your favorite language. For those of you who are not familiar with Python, it is one of the most popular scripting languages for data processing. The syntax is simple, though it may feel less intuitive to C and C# developers. The full source is available on GitHub: https://github.com/wenming/BigDataSamples/blob/master/gutenberg/gutenbergcrawl.py

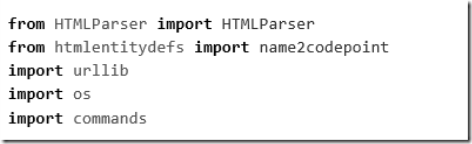

First section of the code:

We need an HTML parser (HTMLParser and htmlentitydefs), a crawler or HTTP client (urllib), and functions for copying files. Please note that we are using Python 2.7, not Python 3; Python 2.7 is still the most popular version at the time of writing.
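If you would rather run a crawler like this on Python 3, the modules listed above were renamed. The sketch below gives the typical Python 2.7 import forms and their Python 3 equivalents as comments. The `TextCollector`, `page_text`, and `entity_char` names are hypothetical; they only show that Python 3's `HTMLParser` decodes references such as `&eacute;` on its own (`convert_charrefs` defaults to `True` since Python 3.5), so `htmlentitydefs` is rarely needed there:

```python
# Typical Python 2.7 import              -> Python 3 equivalent
#   from HTMLParser import HTMLParser    -> from html.parser import HTMLParser
#   import htmlentitydefs                -> import html.entities
#   import urllib                        -> import urllib.request, urllib.parse
from html.parser import HTMLParser
import html.entities


class TextCollector(HTMLParser):
    """Hypothetical helper: gathers the text content of an HTML page."""

    def __init__(self):
        # convert_charrefs=True turns &amp;, &eacute; and similar references
        # into plain characters before handle_data sees them.
        super().__init__(convert_charrefs=True)
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)


def page_text(markup):
    """Return the text of `markup` with character references decoded."""
    parser = TextCollector()
    parser.feed(markup)
    parser.close()  # flush any text still buffered at the end of the input
    return "".join(parser.chunks)


def entity_char(name):
    """Look up a named entity directly, as htmlentitydefs allowed in 2.7."""
    return chr(html.entities.name2codepoint[name])
```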

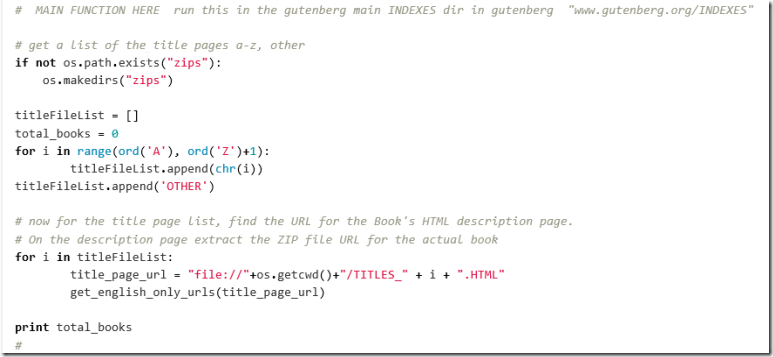

Let’s look at the main function first. It makes a directory called zips, which is where all the final ZIP files go if they qualify as English text.
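In Python 3 this step is essentially a one-liner. A minimal sketch, with `prepare_output_dir` as a hypothetical name; note that the `exist_ok` argument does not exist in Python 2.7, where you would check `os.path.isdir` first:

```python
import os


def prepare_output_dir(path="zips"):
    """Hypothetical helper: create the ZIP output directory, return its absolute path."""
    # exist_ok=True (Python 3.2+) lets the crawler be re-run without failing
    # when the directory is already there.
    os.makedirs(path, exist_ok=True)
    return os.path.abspath(path)
```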

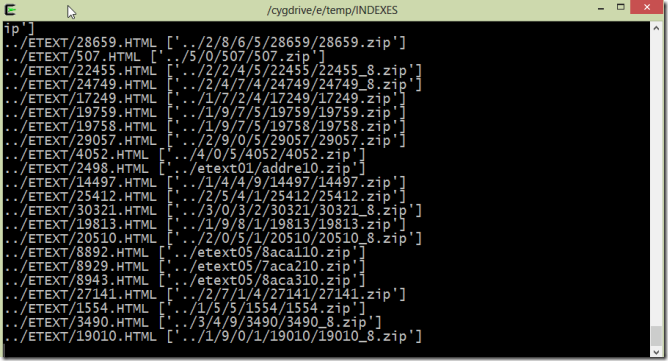

Next, it gets the list of title index pages (A to Z, plus "other"). These HTML index pages reside in the INDEXES directory, and for each of these files we unleash our crawler by calling the function get_english_only_urls(). The TITLES_*.HTML pages contain information about each book: its language and the location of the book's property HTML page, which in turn contains the URL of the book's actual text file.
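Gathering the title pages amounts to a glob over the INDEXES directory. This Python 3 sketch assumes the files are named TITLES_*.HTML exactly as in the text; glob matching is case-sensitive on POSIX systems, so adjust the pattern if your copy uses lower-case names. `title_pages` is a hypothetical name:

```python
import glob
import os


def title_pages(indexes_dir):
    """Hypothetical helper: return the TITLES_*.HTML pages in indexes_dir, sorted."""
    # The pattern comes from the file names described in the text.
    pattern = os.path.join(indexes_dir, "TITLES_*.HTML")
    return sorted(glob.glob(pattern))
```

With it, the main loop reads `for page in title_pages("."): get_english_only_urls(page)`, where `get_english_only_urls` is the original script's function.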

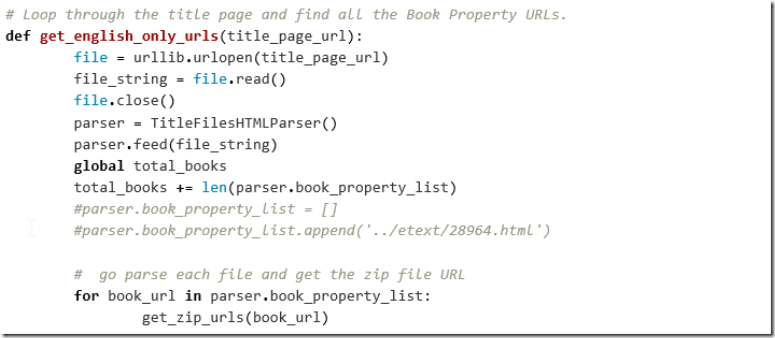

The next step is to open each TITLES_*.HTML page, find the URL of each book's HTML page, and then process the books one by one. Notice that TitleFilesHTMLParser is called here.
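The book links on a title page are relative to that page, so the crawler has to resolve them before following them. A Python 3 sketch of one way to do this, assuming the pages are read from the local disk through file:// URLs; `page_url` and `resolve_link` are hypothetical helpers:

```python
import pathlib
import urllib.parse


def page_url(path):
    """Hypothetical helper: file:// URL for a local page (made absolute first)."""
    return pathlib.Path(path).resolve().as_uri()


def resolve_link(base_url, href):
    """Hypothetical helper: resolve an href against the URL of the page it is on.

    urljoin also collapses '..' segments, so an illustrative link such as
    ../ETEXT/101.HTML on INDEXES/TITLES_A.HTML points into a sibling folder.
    """
    return urllib.parse.urljoin(base_url, href)
```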

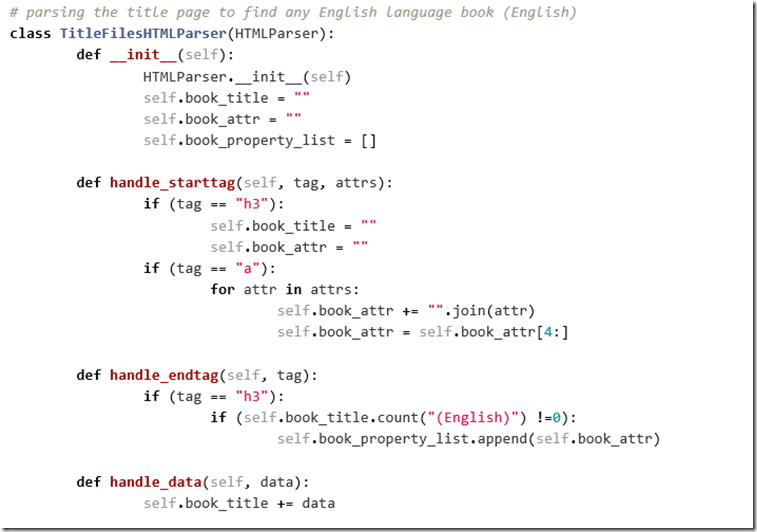

The title page parser only adds books that have an (English) tag next to them.
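The (English) filter can be sketched with Python 3's `html.parser`. This `EnglishTitleParser` is a hypothetical stand-in, not the original TitleFilesHTMLParser, and it assumes each entry looks like `<a href="...">Title</a> (English)`, with the language tag in the text right after the link; check the actual index markup before relying on it:

```python
from html.parser import HTMLParser


class EnglishTitleParser(HTMLParser):
    """Hypothetical stand-in for TitleFilesHTMLParser.

    Collects the href of every link whose following text contains "(English)".
    """

    def __init__(self):
        super().__init__()
        self.english_urls = []
        self._in_anchor = False
        self._last_href = None  # most recent link not yet classified

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._in_anchor = True
            self._last_href = dict(attrs).get("href")

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_anchor = False

    def handle_data(self, data):
        # The language tag is assumed to sit after the link, not inside it.
        if not self._in_anchor and self._last_href and "(English)" in data:
            self.english_urls.append(self._last_href)
            self._last_href = None  # count each link at most once


def english_links(markup):
    """Return the hrefs of English titles found in `markup`."""
    parser = EnglishTitleParser()
    parser.feed(markup)
    parser.close()
    return parser.english_urls
```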

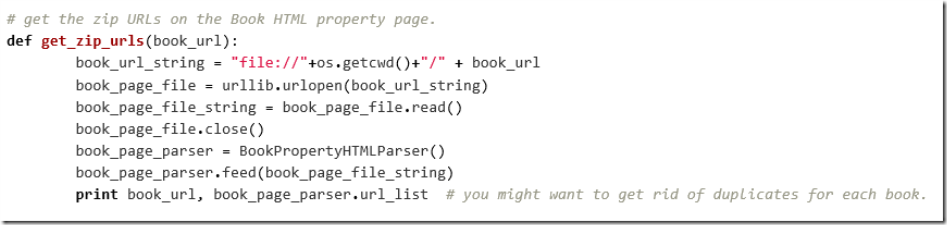

Now we parse the HTML page of each individual book. Notice that BookPropertyHTMLParser() is called on each of these per-book HTML pages.
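Each per-book page has to be fetched and decoded before a parser can see it. A minimal Python 3 sketch, with `read_page` as a hypothetical helper; decoding as Latin-1 is an assumption chosen because it never raises, at the cost of garbling pages that use other encodings:

```python
import urllib.request


def read_page(url, encoding="latin-1"):
    """Hypothetical helper: fetch a file://, http:// or ftp:// page as text.

    Latin-1 maps every byte to a character, so decoding never fails.
    """
    with urllib.request.urlopen(url) as response:
        return response.read().decode(encoding)
```

The decoded text can then be handed to a parser's `feed()` method.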

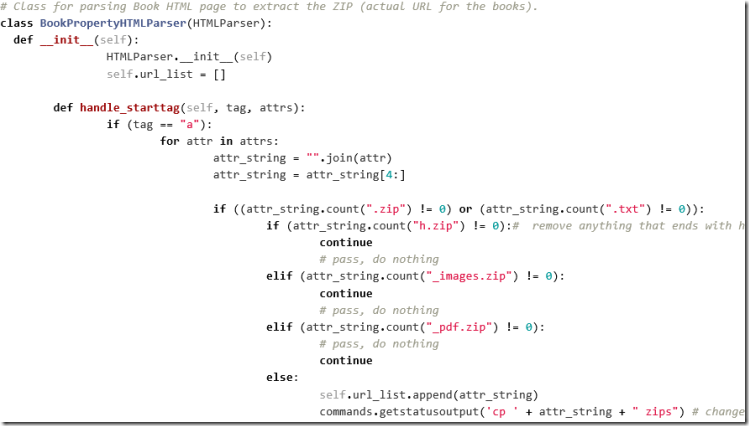

The BookPropertyHTMLParser simply finds the <a href="…*.zip"> tags and copies the matching ZIP files to the zips directory. The code is also a stub for future modification, in case image, HTML, and PDF files need to be processed.
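Here is a Python 3 sketch of the same idea, under stated assumptions: `ZipLinkParser` and `copy_zips` are hypothetical names, not the original BookPropertyHTMLParser, and the hrefs are assumed to be relative, forward-slash paths to files on the local disk. The marked hook is where image, HTML, or PDF links could be handled later:

```python
import os
import shutil
from html.parser import HTMLParser


class ZipLinkParser(HTMLParser):
    """Hypothetical helper: collects hrefs ending in .zip from a book page."""

    def __init__(self):
        super().__init__()
        self.zip_hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        if href.lower().endswith(".zip"):
            self.zip_hrefs.append(href)
        # Hook: image, HTML, or PDF links could be recognized here as well.


def copy_zips(page_path, markup, out_dir="zips"):
    """Hypothetical helper: copy every ZIP linked from the page into out_dir."""
    parser = ZipLinkParser()
    parser.feed(markup)
    parser.close()
    os.makedirs(out_dir, exist_ok=True)
    base = os.path.dirname(page_path)
    copied = []
    for href in parser.zip_hrefs:
        # Assumes a relative href; rebuild it with the local path separator.
        src = os.path.normpath(os.path.join(base, *href.split("/")))
        dst = os.path.join(out_dir, os.path.basename(src))
        shutil.copy(src, dst)
        copied.append(dst)
    return copied
```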

Running the sample:

Extract the ISO contents into a temporary directory. On Windows 8 you can simply select everything with Ctrl+A, then copy and paste it into the directory; on other versions of Windows, you can use WinRAR for the extraction.

Install Cygwin (https://cygwin.org/) and make sure that Python 2.7 and unzip are installed. It certainly helps to learn some basic Unix commands if you deal with lots of text files and raw data files.
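Before running the crawler, you can confirm from inside Cygwin what is actually on your PATH. This Python 3 sketch (`check_environment` is a hypothetical helper) uses `shutil.which` to look for a `python2.7` executable and `unzip`; the executable name `python2.7` is an assumption about how Cygwin installs Python 2.7:

```python
import shutil
import sys


def check_environment(required_tools=("python2.7", "unzip")):
    """Hypothetical helper: report this Python's version and where each tool is.

    The tool name python2.7 is an assumption about the Cygwin install.
    """
    report = {"python": "%d.%d" % sys.version_info[:2]}
    for tool in required_tools:
        # shutil.which returns the tool's full path, or None if it is not on PATH.
        report[tool] = shutil.which(tool)
    return report
```

Any tool that maps to `None` in the result is missing from PATH.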

Get the code from https://raw.github.com/wenming/BigDataSamples/master/gutenberg/gutenbergcrawl.py and put the file in the INDEXES directory under the root of the extracted Gutenberg files.

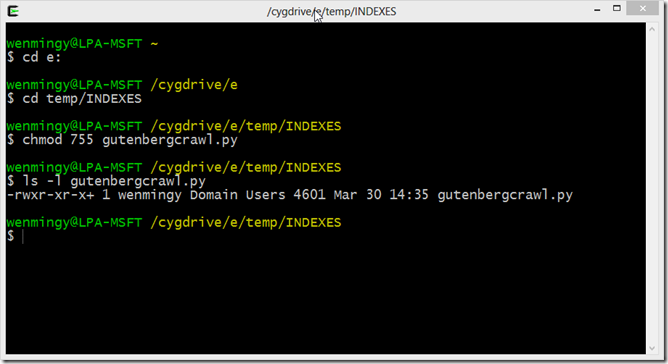

Open the Cygwin terminal and change to the directory where you extracted your files. In my case, I stored the data in E:\temp; in Cygwin, you'll see the drive in a special Unix-style format, so the directory is /cygdrive/e/temp/INDEXES.

To change drive letters in the Cygwin window, type cd e: (you can also use the Unix-style form cd /cygdrive/e).
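Cygwin ships a `cygpath` utility for this conversion (`cygpath -u 'E:\temp'` prints `/cygdrive/e/temp`). The mapping itself is simple enough to sketch in Python 3; `to_cygwin_path` is a hypothetical helper that assumes the default /cygdrive prefix and ignores UNC network paths:

```python
import re

# A drive letter, a colon, an optional separator, then the rest of the path.
_DRIVE_PATH = re.compile(r"^([A-Za-z]):[\\/]?(.*)$")


def to_cygwin_path(win_path):
    """Hypothetical helper: map E:\\temp\\INDEXES to /cygdrive/e/temp/INDEXES."""
    match = _DRIVE_PATH.match(win_path)
    if not match:
        raise ValueError("expected a drive-letter path, got %r" % win_path)
    drive, rest = match.groups()
    # Switch to forward slashes and drop leading/trailing separators.
    rest = rest.replace("\\", "/").strip("/")
    return "/cygdrive/" + drive.lower() + ("/" + rest if rest else "")
```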

Note also that your Python script needs execute permission. In a Unix shell, you change this by setting the permission bits for read, write, and execute (rwx). We want this file to be rwx for yourself, which is 111 in binary, and only r-x, which is 101, for the group and for everyone else. So the bits are 111 (you/user) 101 (group) 101 (everyone), and each three-bit group becomes one octal digit: 7, 5, 5. That translates into 755, hence the command chmod 755 gutenbergcrawl.py.
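The permission arithmetic can be checked in a few lines of Python 3. This hypothetical `rwx_to_mode` helper reads an ls-style string one character at a time, shifting in a 1 for each granted permission and a 0 for each dash:

```python
def rwx_to_mode(perms):
    """Hypothetical helper: convert a string like 'rwxr-xr-x' to a numeric mode."""
    if len(perms) != 9:
        raise ValueError("expected 9 characters, e.g. 'rwxr-xr-x'")
    mode = 0
    for ch, expected in zip(perms, "rwxrwxrwx"):
        mode <<= 1          # make room for the next permission bit
        if ch == expected:
            mode |= 1       # permission granted
        elif ch != "-":
            raise ValueError("unexpected character %r" % ch)
    return mode
```

So `os.chmod("gutenbergcrawl.py", rwx_to_mode("rwxr-xr-x"))` sets the same bits as `chmod 755 gutenbergcrawl.py`.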

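Now we simply run the script by executing ./gutenbergcrawl.py. Why the ./ in front? It specifies that you want to run the command from the current directory. By default, bash looks up commands only in the directories listed in PATH, which does not include the current directory, for security reasons: otherwise you might accidentally run a script you don't intend to. (Running a script this way also requires a shebang line, such as #!/usr/bin/env python, at the top of the file; alternatively, you can run python gutenbergcrawl.py.)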

Conclusion

In this tutorial, we showed you how to find datasets on the internet for demos and examples, and we went through a detailed example of pre-processing the Gutenberg dataset. In this example, we used Cygwin, a Unix emulation environment for Windows, and we showed you some simple Python code for parsing HTML pages to extract metadata. This gives you a flavor of what data scientists do when it comes to getting and pre-processing datasets.

In later blog posts, we will explore how to use this dataset to run word count on HDInsight.