Windows HPC Pack 2012 released, HPC/Big Compute explained for Windows Azure developers

While most of you who have been using the Microsoft HPC Server product know the history and background of HPC, I still find that many Windows Azure developers are new to this product. HPC, or high performance computing, is a small (14 billion dollar) industry that has been around for 40 years, since before desktops became popular. It is best described as building supercomputers with enough raw computing power to solve the largest computational problems possible. The most recent practice for doing so is to cluster a number of compute nodes together into a “compute cluster”. The world’s largest unclassified supercomputers, reported on the Top 500 list, have hundreds of thousands of processor cores connected by specialized interconnects many times faster than Gigabit Ethernet. These machines are used to perform numerical simulations in nuclear physics, life sciences, chemistry, weather prediction, automotive crashworthiness and many more fields. As computing power has become cheaper, industry now uses large clusters for a variety of tasks. Some of the most popular applications include 3D ray tracing, digital movie production, financial portfolio evaluation and trading, and oil reservoir exploration. A newer and more popular term, Big Compute, has emerged to describe the industry that solves large computational problems with large clusters. Because the public cloud can conveniently provide a large number of machines almost instantly on demand, it is likely to propel the industry further by making compute clusters accessible to many more companies and individuals.

One of the most frequently asked questions I get about HPC is how it differs from big data. The simplest answer is that big data solves data analysis problems by using a cluster of machines to store and analyze an amount of data that cannot be handled by a single machine. Big data, however, relies more on disk IO performance than on raw CPU power. A typical HPC node may have GPUs and expensive CPUs with many cores to perform raw computation, while a common big data node may have a lot of RAM and two dozen disks. Of course, many clusters are configured for both IO and CPU power. Another important component shared by Big Compute and big data is a batch processing system. This system, often called a scheduler, understands the resources the cluster has and schedules long-running tasks on it. A batch system often has multiple users submitting many long-running jobs that use different amounts of resources. The scheduler’s job is to keep the cluster utilized as efficiently as possible based on priorities, policies, and usage patterns. Windows HPC Pack provides a scheduler that performs sophisticated Big Compute scheduling across the Windows 8 workstation, Windows Server, and Windows Azure compute nodes that it manages.
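To make the scheduler's role concrete, here is a minimal toy sketch of a priority-based batch scheduler with simple backfill. It is not how the HPC Pack scheduler works internally; the `Job` fields, the "higher number means more urgent" convention, and the greedy policy are all assumptions chosen for illustration. Pending jobs are ordered by priority (ties broken by submission order), and a job too large for the remaining cores is skipped so that smaller jobs behind it can still use idle cores.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    user: str
    cores: int
    priority: int  # hypothetical convention: higher value = more urgent
    order: int     # submission order, used to break priority ties (FIFO)


def schedule(jobs, total_cores):
    """Greedy priority scheduler with simple backfill (toy model).

    Returns (started, pending). Jobs are considered by descending priority,
    then submission order. A job that does not fit in the remaining cores is
    left pending, and smaller jobs behind it may still start (backfill).
    """
    free = total_cores
    started, pending = [], []
    for job in sorted(jobs, key=lambda j: (-j.priority, j.order)):
        if job.cores <= free:
            free -= job.cores
            started.append(job)
        else:
            pending.append(job)
    return started, pending


if __name__ == "__main__":
    # Hypothetical workload from four users on a 112-core cluster.
    jobs = [
        Job("crash-sim", "alice", 64, priority=2, order=0),
        Job("render", "bob", 48, priority=1, order=1),
        Job("risk-eval", "carol", 32, priority=2, order=2),
        Job("sweep", "dave", 16, priority=0, order=3),
    ]
    started, pending = schedule(jobs, total_cores=112)
    print("started:", [j.name for j in started])
    print("pending:", [j.name for j in pending])
```

In this example the 48-core `render` job waits, while the lower-priority 16-core `sweep` job backfills the last idle cores. Real schedulers add reservations so that large jobs are not starved indefinitely, along with fair-share and preemption policies.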

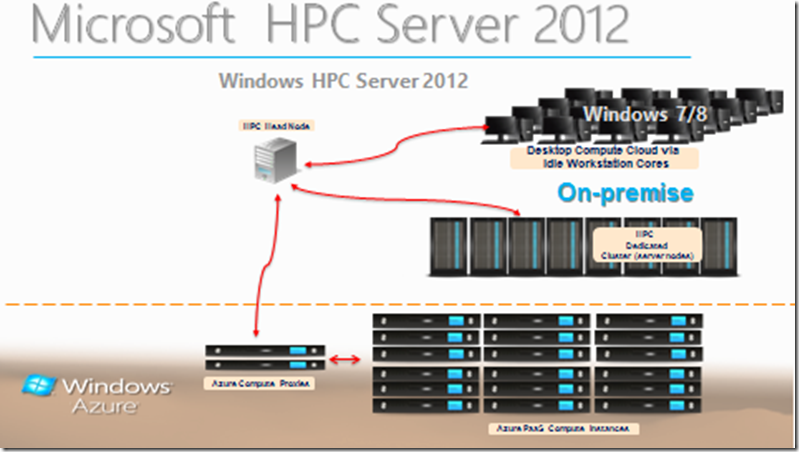

Our HPC team just announced the release of HPC Pack 2012. Unlike previous releases, there is no longer an HPC SKU for Windows Server 2012. Instead, we are releasing it as a free add-on pack. It is just as powerful as previous Windows HPC Server releases, and you can use it to control not only server nodes, but also Windows 8 workstation nodes and Windows Azure nodes (Azure burst). You can download all the related packages from this page: https://technet.microsoft.com/library/jj899572.aspx#BKMK_download

The idea behind bursting HPC Server into the cloud is simple: if you want to run existing compute-intensive code on multiple compute nodes, you can package it up and run it on Windows Azure nodes. Little if any programming is required. By installing HPC Pack, you get the Azure burst feature for free.
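Bursting fits best with embarrassingly parallel work such as a parameter sweep, where the same unchanged program runs many times on different inputs and the runs never need to talk to each other. The sketch below is a local illustration under stated assumptions: `simulate` is a hypothetical stand-in for your existing code, the node names are made up, and round-robin assignment is just one simple way to spread tasks. It does not use any HPC Pack API. Locally, a process pool plays the role of the compute nodes.

```python
from concurrent.futures import ProcessPoolExecutor


def simulate(param: int) -> int:
    """Hypothetical stand-in for an existing compute-intensive routine."""
    # Toy workload: sum of squares from 0 to param.
    return sum(i * i for i in range(param + 1))


def assign_round_robin(task_ids, node_names):
    """Map each independent task to a node in round-robin order (toy policy)."""
    plan = {node: [] for node in node_names}
    for k, task in enumerate(task_ids):
        plan[node_names[k % len(node_names)]].append(task)
    return plan


if __name__ == "__main__":
    params = list(range(1, 9))
    # Hypothetical node names, for illustration only.
    nodes = ["AzureCN-0001", "AzureCN-0002", "AzureCN-0003"]
    print(assign_round_robin(params, nodes))

    # Local stand-in for the cluster: each worker process acts as one node.
    # The simulation code itself is unchanged; only the inputs differ.
    with ProcessPoolExecutor(max_workers=len(nodes)) as pool:
        results = list(pool.map(simulate, params))
    print(results)
```

Because the tasks are independent, adding nodes shortens the wall-clock time roughly in proportion, which is what makes on-demand cloud nodes attractive for this kind of job.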

The new release gives you several deployment options. I’ll illustrate a few:

- Deploy the head node on-premises, and control on-premises Windows Server nodes and/or Windows 8 workstation nodes.

- Deploy the head node on-premises, and control Azure PaaS nodes.

- Deploy the head node as an IaaS VM in Windows Azure, and control Azure PaaS nodes in Windows Azure.

If you would like to learn more about the industry, you can read my previous post: Super computing 2012 and new Windows Azure HPC Hardware Announcement. Another post you should read is Windows Azure Benchmarks Show Top Performance for Big Compute by Bill Hilf, which details how our upcoming hardware for Big Compute on Windows Azure performs. The new HPC hardware offers a beefy 120 GB of RAM and InfiniBand for a super-fast 40 Gbps interconnect between compute nodes.

In the next series of blog posts, I’ll give a tutorial on deploying and running HPC applications. We’ll start by walking you through deploying an HPC cluster entirely on Windows Azure with “head node” burst, which is option 3 above. The steps are:


- Create a Windows Server 2012 IaaS VM.

- Install HPC Pack.

- Prepare and deploy Azure PaaS nodes.

HPC Pack 2012 can manage and control workstations, server nodes, and Windows Azure compute nodes.