Consuming SQL Server Data Service aka “Dallas” Service in SSIS

Lets assume a scenario…You are a BI guy of your team and your company has just started working on a business case where there is a requirement for a non-proprietary data. For example assume that your company is making an asset management system which wants to utilizes the ETL process to gather the weather data to make business decisions.

Some of the other scenarios may involve the use of...

- Energy Statistics

- Greenhouse Gas (GHG) Inventory Data

- Industrial Commodity Statistics

- core health statistics for the WHO Member States.

One place where you can get this data is from SQL Data Services (SDS). This service provides a lot of benefits for developers as as:

○ Trial subscriptions for premium content.

○ Discover and license valuable data.

○ Consistent REST based APIs for all datasets.

○ Easy to consume various contents.

○ Automatic C# proxy classes providing instant object models.

○ consume third party data inside your LOB applications

Well, if you know that the Data you are looking for is available on the cloud and if you also know that you should be using SSIS as a crucial ETL tool to …now what?

I was recently trying to utilize some of the RESTFul services offered in Dallas in SSIS and found that though we don’t have a built in Task that provide capability of consuming a Restful Dallas service off the cloud, it’s pretty easy to get around this situation.

Now you need to keep in mind that the service exposed in SQL Data Services (SDS) are RESTFul in nature. This blog will not discuss in detail about the consuming RESTFul services. However, for those who are new to this term, REST stands for REPRESENTATIONAL STATE TRANSFER.

This is one of the several(SOAP, XML-RPC) ways of exposing your Service/Data Service.

Keeping implementation in mind, methodology of creating RESTful service is that:

1. Application state and functionality is divided into resources.

2. Every resource is uniquely addressable using a universal syntax.

General Flow of consuming a RESTFul service

public void Main()

{

Uri address = new Uri("https://search.yahooapis.com/WebSearchService/V1

/webSearch?appid=YahooDemo&query=rest");

HttpWebRequest request = (HttpWebRequest)WebRequest.Create(address);

try

{

XDocument xml = null;

using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())

{

using(StreamReader reader=new StreamReader(response.GetResponseStream()))

{

xml = XDocument.Parse(reader.ReadToEnd());

}

}

}

catch (Exception ex)

{

throw ex.InnerException;

}

Dts.TaskResult = (int)ScriptResults.Success;

}

//Then write a XML Processing routine or an XSLT transformation to extract the

//information.

//Complexity grows as soon as your requirement for consuming more Data Sets. You

// might have to

//write more XML processing instructions or, more XSLT as you consume more services.

}

Obviously, the later part requires a lot more of work than quick off the shelf solution

which SQL Data Services provides.

SQL Data Services for RESCUE…..

Let me show you how it's so easy to consume a SQL Data Service. This is made possible as each Service provider provides it's Proxy

Class which you can use in your projects. This is one of the biggest offerings for SDS and definitely made to save a lot of development time.

General Flow of consuming a RESTFul “Dallas” service

1. Provide account information to authenticate request.

2. Instantiate Proxy class . Which (Constructor) checks for the paging and sets the URI

3. Invoke the service via proxy

4. Get the data back in strongly typed IEnumerable format, against which you can write neat LINQ queries.

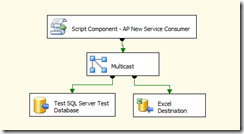

The final DEMO solution uses a Script Component in DataFlow Task.

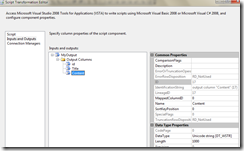

Lets go ahead and design the Script Component. Before you can consume the required Data Service you need the proxy class which

you can download from the Service Subscription page.

Add the class file to your solution

Note: Some of these classes uses .Net Framework 3.5 libraries. In that case you need to make sure that project is set for the correct

Target Framework. So that you can resolve the Framework Library namespaces.

In my solution, I am trying to consume AP Data Service for the news Feed and I am interested in three columns: Id, Title and Content

(exposed by AP Data Service via NewsCategoriesService class).

Once I am receiving the required data, I would like to push it down to other components such as SQL Server Destination or Excel

destination. Hence, I have added three columns in Input and Output section of the Script Component.

Now lets override the PreExecute() to consume the AP News Service to get News Categories and CreateNewOutputRows() to push the

available rows to other tasks further down the line of your ETL solution.

public override void PreExecute()

{

base.PreExecute();

string accountKey = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX";

System.Guid uniqueUserID = newGuid("XXXXXXX-XXXX-XXXX-XXXX-XXXX");

NewsCategoriesService myNewsCategoriesService = newMicrosoft.Dallas.Services.NewsCategoriesService(accountKey, uniqueUserID);

myNewsItems = myNewsCategoriesService.Invoke();

}

public override void CreateNewOutputRows()

{

foreach (var item in myNewsItems)

{

MyOutputBuffer.AddRow();

MyOutputBuffer.id = item.Id;

MyOutputBuffer.Title = item.Title;

MyOutputBuffer.Content = item.Content;

}

}

There you go!! you got yourself your TIMESAVING-ETL Solution and a lot of accolades for saving your boss’s day.

~Jay