Understanding and Using OData – OData Publication (2 of 4)

This article is part of a series:

- Understanding and Using OData (1 of 4)

- OData Publication (2 of 4) – This article

- Analysing OData Feeds (3 of 4)

- Creating a Windows Phone 7 OData Client Application (4 of 4)

Hopefully these articles will show you how to produce and publish an OData Feed, Create relationships between feeds from different sources, analyse OData and finally how to develop a custom OData client. This should give a really good appreciation of some of the possible uses for OData.

This article discusses publication of the required data sets as OData services. In order to do this, I decided to:

- Import the data into a local SQL Server database (using SSIS)

- Copy the data to SQL Azure (using BCP)

- Publish an OData endpoint (using SQL Azure Labs)

Importing to Local SQL Server

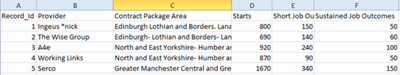

I chose to import a public dataset published to data.gov.uk by the Department for Work and Pensions relating to the New Deal programme:

Firstly, I prepared the data for import, by making it into a simple CSV format, looking out for and then replacing problematic characters such as quotes and commas in strings. I also added an Id column to the data, which was just a sequential number (this is only necessary if your data doesn’t have a natural primary key):

Next I used the SQL Server Import and Export Wizard This is not a step by step guide to using the import and export wizard (for more details on the wizard, click here). Access the wizard from the SQL Server folder in the start menu or the tasks menu in SQL Server Management Studio:

I chose the file I wished to import, and then looked at the column definitions:

Once my input data format was fully defined, I moved on to define the output:

And I also saved the definition as an SSIS package:

This is useful in creating a fully functioning SSIS package to automate the transfer.

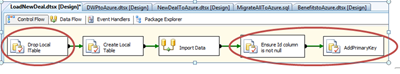

I then opened Business Intelligence Development Studio (BIDS) to complete development of the SSIS Package. I opened the packaged produced by the wizard and added a couple of things:

The Create Local Table and Import Data tasks were created by the wizard (I have renamed them here).

I also added tasks (highlighted in the diagram) to:

- Drop the table first, to enable the package to be re-run to refresh the data automatically.

- Ensure that the id column in the CSV file is not null, and also to set the Id column as the primary key (all tables must have a primary key to before being published as an OData endpoint).

Now, I can make changes to my CSV file and re-run my SSIS package to get a refreshed table in my local SQL Server database.

Copying the Data to SQL Azure

The next step is to extend the SSIS package created above to copy the data for publication to SQL Azure. In this article, I will assume you already have a SQL Azure account and know how to use the Azure Management Portal.

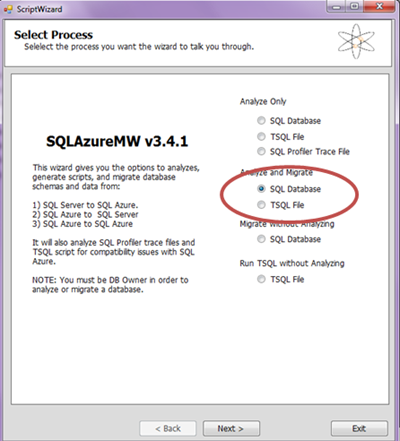

The SQL Azure Migration Wizard helps you migrate your local SQL Server 2005 / 2008 databases into SQL Azure. I downloaded the tool, unzipped it and executed it:

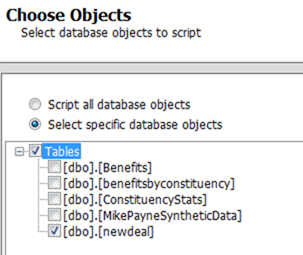

I connected to the local database where I imported the CSV data and selected the table I wanted to transfer to SQL Azure:

The wizard will do two things:

- Generate a SQL script to create the table(s) on SQL Azure

- Extract the data (using BCP) ready from import into SQL Azure after the table has been created

I saved the SQL Script locally in the BIDS project directory:

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[newdeal]') AND type in (N'U'))

BEGIN

CREATE TABLE [dbo].[newdeal]

(

[Record_Id] [numeric](18, 0) NOT NULL,

[Provider] [varchar](50) COLLATE Latin1_General_CI_AS NULL,

[Contract Package Area] [text] COLLATE Latin1_General_CI_AS NULL,

[Starts ] [numeric](18, 0) NULL,

[Short Job Outcomes] [numeric](18, 0) NULL,

[Sustained Job Outcomes] [numeric](18, 0) NULL,

PRIMARY KEY CLUSTERED

(

[Record_Id] ASC

)

)

END

GO

-- BCPArgs:23:[dbo].[newdeal] in "c:\SQLAzureMW\BCPData\dbo.newdeal.dat" -E -n -b 10000

Note that the wizard has exported the data ready for import into SQL Azure.

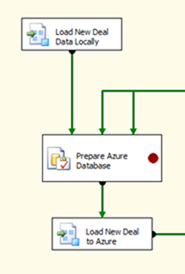

The next step was to use the assets generated by the SQL Azure Migration Wizard to extend the SSIS package developed above:

The original package is names “Load New Deal Data Locally” and after that package has completed I execute a new task “Prepare Azure Database”. This task simply executes the SQL script generated by the SQL Azure Migration Wizard. (Note that the script only creates the tables if they don’t exist. I chose to leave it like this, and add code to truncate the tables, which means the script can run on demand to refresh the data. If the data format changes I will need to drop the tables manually from SQL Azure.)

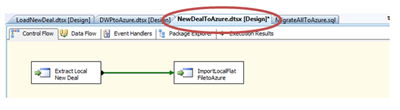

My final task is “Load New deal to Azure”. This task executes another package called “New Deal to Azure.dtsx”. The tasks in this package are shown below:

This package contains two tasks, both based on the Bulk Copy (BCP) output generated by the SQL Azure Migration Wizard.

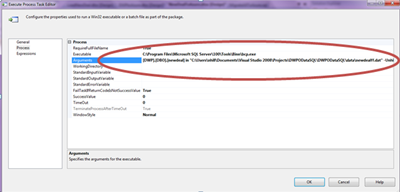

Firstly, the data is extracted from the local database in the Extract Local New Deal Task:

This task runs a Win32 executable (BCP) with the correct parameters:

BCP [DWP].[DBO].[newdeal] out "C:\Users\nhill\Documents\Visual Studio 2008\ Projects\ DWPODataSQL\DWPODataSQL\data\newdealff.dat" -T -S. -E -n -b 5000

Where:

- Out = Export Data

- -T = Trusted Authentication

- -S. = Server (. Is localhost, default instance)

- -E = Keep Identity Values

- -n = native type

- -b = batch size (5000)

Then, the data is imported to SQL Azure:

[DWP].[DBO].[newdeal]

in "C:\Users\nhill\Documents\Visual Studio 2008\Projects\DWPODataSQL

\DWPODataSQL\data\newdealff.dat" -Unhill@frq6joxf0e -PPassword

-Sfrq6joxf0e.database.windows.net -E -n -b 5000

Where:

- In = Import Data

- -U = SQL Azure User (user@host)

- -P = password

- -S. = Server (SQL Azure database server)

The SSIS Package will now automatically process the source CSV file and update an equivalent table on SQL Azure.

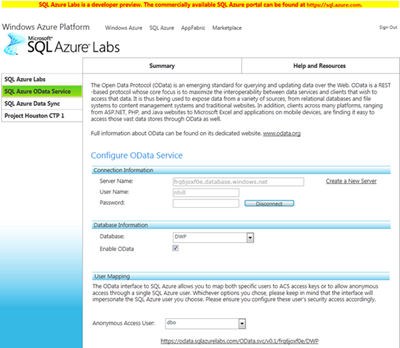

Publishing an OData Endpoint

The easiest way to publish an OData endpoint from your SQL Azure database is using SQL Azure Labs. After logging in with your Live Id, you are presented with a series of preview features. Select the OData Service tab:

Using this service to configure an OData service is a simple matter. Specify the SQL Azure Server name, user id and password. Select the SQL Azure database and select the check box to enable OData. Select a user (dbo) to enable anonymous access.

This will then generate an OData endpoint through which to access your OData Service. In this case:

https://odata.sqlazurelabs.com/OData.svc/v0.1/frq6joxf0e/DWP

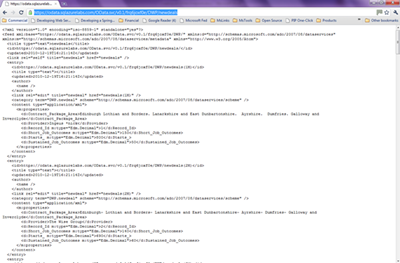

Accessing the url will provide a list of Entities exposed by the OData service:

Accessing the newdeals service will display the data:

Different browsers will render the OData feed differently, Chrome will return the raw XML (see above), while Firefox and IE 9 will return an RSS Feed (and you may need to ‘view source’ to see the data.

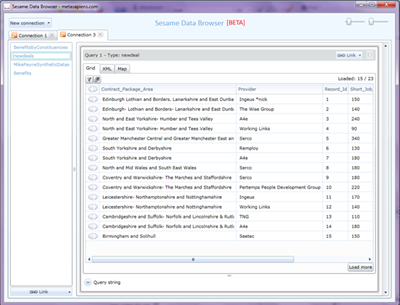

In order to test your feed, probably the best approach is to use OData Explorer, LINQPad, or Sesame. The following snip shows the OData feed in Sesame:

You may need to run the Silverlight applications locally to connect to the service.

I wanted to access my OData feed using Tableau, but the Tableau software sends an empty authentication header, even when trying to access a service anonymously. This caused the SQL Azure Labs OData service to try to resolve an SWT Token (unsuccessfully) which meant that Tableau could not access my feed. Thanks to Mike Pizzo for updating the SQL Azure Labs service to ‘work around’ this and Tableau will now happily work with a Labs Feed.

However, before Mike made his work around, I tried an alternative approach: Creating a custom WCF Data service. This is incredibly easy to do, and provides a much finer grained control of the published service. You can read about this here.

In the next part of this article, I will discuss analysing the data from my OData feeds using Microsoft PowerPivot and Tableau.

Written by Nick Hill