IIS Media Services: Live Smooth Streaming, Fault Tolerance and Architecture

A question I frequently receive is about the suggested architecture for live Smooth Streaming and about fault tolerance during a live event.

In this post I will try to explain, in a simple way, some details of Live Smooth Streaming and summarize the architecture and the recommendations for delivering robust live streaming with IIS Media Services (IIS MS).

One of the most significant differences between Smooth Streaming and earlier technologies for real-time streaming is the way content is delivered to the client player.

In classic streaming (RTSP, RTMPE, WMS, etc.), the client opens a persistent connection to the streaming server, which controls the session, pushes the video to the client and manages the buffers. The server receives control commands from the client, such as play and pause, reacts to them and pushes the content to the client. This delivery model has significant limits in terms of scalability, because it requires a persistent connection to a specific server, and in terms of cost, because it requires a specialized server to deliver video content.

To offer a more scalable solution at lower cost, Smooth Streaming uses a pull model based on standard HTTP GET requests, for live streaming as well. This makes it possible to use simple HTTP cache servers to distribute video content on the Internet. In this model the client drives the streaming and requests content from any HTTP cache server involved in the distribution, without any affinity or dependency on a specific server. This dramatically increases scalability and reduces the cost of this type of service. In Smooth Streaming the video content is divided into a sequence of chunks of a specific duration; the client downloads them with a sequence of HTTP GET requests, uses them to fill a buffer, and then starts decoding and playing the video.

In live Smooth Streaming the client starts by reading a manifest via HTTP GET. The manifest describes the available bitrates/quality levels, the URL template used to download content, and the current status of the live stream: the list of available video and audio chunks, the time code that drives the streaming, and the duration of every chunk. For example, below you can see a manifest for a live stream with a ten-minute DVR window (DVRWindowLength="6000000000" with TimeScale="10000000", that is 600 seconds):

<?xml version="1.0"?>
<SmoothStreamingMedia MajorVersion="2" MinorVersion="0" Duration="0" TimeScale="10000000" IsLive="TRUE" LookAheadFragmentCount="2" DVRWindowLength="6000000000" CanSeek="TRUE" CanPause="TRUE">
  <StreamIndex Type="video" Name="video" Subtype="WVC1" Chunks="0" TimeScale="10000000" Url="QualityLevels({bitrate})/Fragments(video={start time})">
    <QualityLevel Index="0" Bitrate="600000" CodecPrivateData="250000010FC3920CF0778A0CF81DE8085081124F5B8D400000010E5A47F840" FourCC="WVC1" MaxWidth="416" MaxHeight="240"/>
    <QualityLevel Index="1" Bitrate="1450000" CodecPrivateData="250000010FCBAC19F0EF8A19F83BE8085081AC3FEE9FC00000010E5A47F840" FourCC="WVC1" MaxWidth="832" MaxHeight="480"/>
    <QualityLevel Index="2" Bitrate="350000" CodecPrivateData="250000010FC38A09B0578A09B815E80850808AADEACF400000010E5A47F840" FourCC="WVC1" MaxWidth="312" MaxHeight="176"/>
    <QualityLevel Index="3" Bitrate="475000" CodecPrivateData="250000010FC38E09B0578A09B815E80850810E7E487A400000010E5A47F840" FourCC="WVC1" MaxWidth="312" MaxHeight="176"/>
    <QualityLevel Index="4" Bitrate="1050000" CodecPrivateData="250000010FCBA01210A78A121829E8085081A00AD01B400000010E5A47F840" FourCC="WVC1" MaxWidth="580" MaxHeight="336"/>
    <c t="0" d="20000000"/>
    <c d="20000000"/>
    <c d="20000000"/>
    <c d="20000000"/>
    <c d="20000000"/>
    <c d="20000000"/>
  </StreamIndex>
  <StreamIndex Type="audio" Name="audio" Subtype="WmaPro" Chunks="0" TimeScale="10000000" Url="QualityLevels({bitrate})/Fragments(audio={start time})">
    <QualityLevel Index="0" Bitrate="64000" CodecPrivateData="1000030000000000000000000000E00042C0" FourCC="" AudioTag="354" Channels="2" SamplingRate="44100" BitsPerSample="16" PacketSize="1487"/>
    <c t="0" d="20897959"/>
    <c d="19969161"/>
    <c d="19388662"/>
    <c d="20897959"/>
    <c d="19620862"/>
    <c d="20897959"/>
  </StreamIndex>
</SmoothStreamingMedia>

In the manifest you can see the audio and video tracks, the bitrates available to the client, the DVR window length of the content, the IsLive attribute that tells the client that this is a live stream, and the list of chunks available for download. In each chunk entry (the c element), the t attribute holds the time code and the d attribute the duration; when t is omitted, the chunk starts where the previous one ends. With this information the client can calculate the current position of the live stream and start pulling chunks to fill its buffer for correct playback, using a sequence of HTTP GET requests to one of the HTTP cache servers involved in the streaming. The client buffer size can be set with a specific parameter in the player configuration.
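As a sketch of how a client could derive the timeline from the manifest, the following Python code parses an abbreviated copy of the manifest above (most quality levels and all CodecPrivateData values removed) with the standard library's xml.etree.ElementTree, rebuilds the start time of every chunk from the t and d attributes, and computes the live edge and the DVR window in seconds. The helper name chunk_timeline is hypothetical, and this is an illustration under simplifying assumptions (a single manifest snapshot, no error handling), not the actual player implementation.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the manifest above (values kept, most attributes dropped).
MANIFEST = """<?xml version="1.0"?>
<SmoothStreamingMedia MajorVersion="2" MinorVersion="0" Duration="0" TimeScale="10000000"
    IsLive="TRUE" LookAheadFragmentCount="2" DVRWindowLength="6000000000">
  <StreamIndex Type="video" Name="video" TimeScale="10000000"
      Url="QualityLevels({bitrate})/Fragments(video={start time})">
    <QualityLevel Index="0" Bitrate="600000"/>
    <QualityLevel Index="2" Bitrate="350000"/>
    <c t="0" d="20000000"/><c d="20000000"/><c d="20000000"/>
  </StreamIndex>
  <StreamIndex Type="audio" Name="audio" TimeScale="10000000"
      Url="QualityLevels({bitrate})/Fragments(audio={start time})">
    <QualityLevel Index="0" Bitrate="64000"/>
    <c t="0" d="20897959"/><c d="19969161"/><c d="19388662"/>
  </StreamIndex>
</SmoothStreamingMedia>"""


def chunk_timeline(stream):
    """Return (start, duration) pairs for every <c> element of a StreamIndex.

    A <c> without an explicit t starts where the previous chunk ended.
    """
    timeline, next_start = [], 0
    for c in stream.findall("c"):
        start = int(c.get("t", next_start))
        duration = int(c.get("d"))
        timeline.append((start, duration))
        next_start = start + duration
    return timeline


root = ET.fromstring(MANIFEST)
timescale = int(root.get("TimeScale"))
dvr_seconds = int(root.get("DVRWindowLength")) / timescale
print("DVR window (s):", dvr_seconds)
for stream in root.findall("StreamIndex"):
    timeline = chunk_timeline(stream)
    last_start, last_duration = timeline[-1]
    live_edge = last_start + last_duration  # end of the newest advertised chunk
    print(stream.get("Type"), "last t =", last_start, "live edge (s) =", live_edge / timescale)
```

The live edge is simply the end of the newest advertised chunk; a real player would typically start playback some distance behind it to leave room for its buffer.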

Below you can see the structure of the HTTP GET request that the client uses for video chunks:

https://{service uri}/QualityLevels({bitrate})/Fragments(video={start time})

and for audio chunks:

https://{service uri}/QualityLevels({bitrate})/Fragments(audio={start time})

As you can see, the client sends an HTTP GET request for every audio and video chunk, and specifies in the request the bitrate it wants and the start time code of the fragment.

This is a sample request for a video chunk at 350 Kbps with time code 60000000:

https://localhost/test.isml/QualityLevels(350000)/Fragments(video=60000000)

and this is a sample request for an audio chunk at 64 Kbps with time code 10980716553:

https://localhost/test.isml/QualityLevels(64000)/Fragments(audio=10980716553)
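A minimal sketch of how a client could build these request URLs: it fills the Url template taken from the StreamIndex element of the manifest with the chosen bitrate and the chunk time code. The function name fragment_url is hypothetical; the templates and the sample values are the ones shown above.

```python
def fragment_url(service_uri, url_template, bitrate, start_time):
    """Fill a StreamIndex Url template such as
    'QualityLevels({bitrate})/Fragments(video={start time})'."""
    path = (url_template
            .replace("{bitrate}", str(bitrate))
            .replace("{start time}", str(start_time)))
    return f"{service_uri.rstrip('/')}/{path}"


# Url attributes of the video and audio StreamIndex elements in the manifest.
VIDEO_TEMPLATE = "QualityLevels({bitrate})/Fragments(video={start time})"
AUDIO_TEMPLATE = "QualityLevels({bitrate})/Fragments(audio={start time})"

print(fragment_url("https://localhost/test.isml", VIDEO_TEMPLATE, 350000, 60000000))
# https://localhost/test.isml/QualityLevels(350000)/Fragments(video=60000000)
print(fragment_url("https://localhost/test.isml", AUDIO_TEMPLATE, 64000, 10980716553))
# https://localhost/test.isml/QualityLevels(64000)/Fragments(audio=10980716553)
```

Because the URL depends only on the publishing point, the bitrate and the time code, any HTTP cache that has already served the same fragment can answer the request.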

The client selects the bitrate it requests from the server and adjusts it continuously during the streaming, based on the actual bandwidth available for the download and on the client's ability to decode that quality level.
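The adaptation logic of the real player is more sophisticated than this, but a simple heuristic conveys the idea. The sketch below is a hypothetical rule, not the Smooth Streaming client algorithm: it picks the highest bitrate that fits within a fraction (the assumed safety parameter) of the measured bandwidth, optionally capped by a max_bitrate value standing in for the client's decoding capability, and falls back to the lowest level when nothing fits.

```python
def select_bitrate(available, measured_bps, safety=0.8, max_bitrate=None):
    """Pick the highest bitrate within safety * measured bandwidth.

    Hypothetical heuristic: `safety` leaves headroom for bandwidth fluctuations,
    `max_bitrate` models the client's decoding limit. Falls back to the lowest
    available bitrate when no level fits the budget.
    """
    budget = measured_bps * safety
    if max_bitrate is not None:
        budget = min(budget, max_bitrate)
    candidates = [b for b in available if b <= budget]
    return max(candidates) if candidates else min(available)


BITRATES = [600000, 1450000, 350000, 475000, 1050000]  # video levels in the manifest

print(select_bitrate(BITRATES, 1_500_000))                       # 1050000
print(select_bitrate(BITRATES, 2_000_000, max_bitrate=600000))   # 600000
print(select_bitrate(BITRATES, 300_000))                         # 350000
```

Re-running this choice before every chunk request is what lets the client move between quality levels while the stream keeps playing.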

The time code for every request is calculated from the information in the manifest, and a local clock is used to pace the requests for new time codes so that they stay aligned with the buffer in the client.
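To illustrate the role of the local clock, here is a minimal sketch assuming a constant chunk duration and assuming that the chunk at last_start was the newest one when the manifest was read (both simplifications; the function name due_timecodes is hypothetical). It returns the time codes of the fragments that should have been produced in the time elapsed since then, measured in seconds with a local monotonic clock.

```python
def due_timecodes(last_start, duration, elapsed_seconds, timescale=10_000_000):
    """Time codes of fragments expected to be available after `elapsed_seconds`.

    Assumes constant chunk duration and that the chunk starting at `last_start`
    was complete when the manifest was read. Fragment k (k = 1, 2, ...) starts at
    last_start + k * duration and is complete k * duration ticks later.
    """
    elapsed_ticks = int(elapsed_seconds * timescale)
    count = elapsed_ticks // duration
    return [last_start + (k + 1) * duration for k in range(count)]


# In a player, elapsed_seconds would be time.monotonic() minus the monotonic
# timestamp taken when the manifest was downloaded.
print(due_timecodes(40_000_000, 20_000_000, 4.5))  # [60000000, 80000000]
```

Using a monotonic clock rather than wall-clock time avoids jumps when the system clock is adjusted during playback.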

As you can understand from this description, the time code plays a fundamental role in this type of streaming.

To go deeper into the architecture required to achieve robust, fault-tolerant live streaming, let's start by describing the role that IIS MS plays in it.

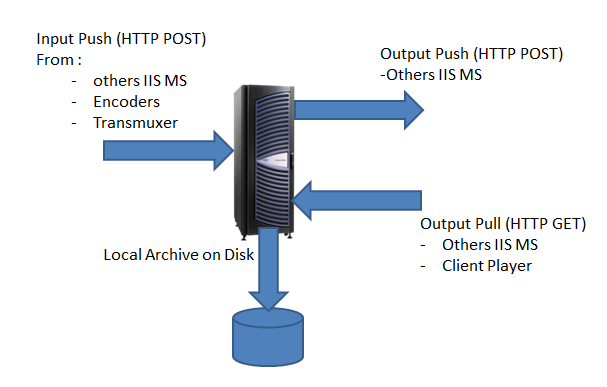

Every IIS MS server can receive video fragments from an encoder or from another IIS MS server, and can serve them to a client, to another IIS MS server or to an HTTP cache server.

IIS MS can receive or transmit content in push or pull mode, from an encoder or from another IIS MS server, and can store a local archive of the live content. Starting with IIS MS 4.0 you can also enable trans-muxing of the content to HTTP Live Streaming for Apple devices, as I described in a previous post on this topic.

In the picture below you can see the possible input/output combinations of video fragments for IIS MS:

For push input, IIS MS accepts long-running HTTP POST requests to a specific REST URL of the publishing point configured for live streaming; a stream ID is appended to the publishing point URL to identify each stream uniquely. For example, for a publishing point with this URL:

https://localhost/test.isml

the URL used to push the content with a long-running HTTP POST is:

https://localhost/test.isml/streams({streamid})

The "streamID" value uniquely identifies an incoming fragmented stream transmitted over an HTTP POST request. One fragmented MP4 stream can contain multiple tracks (e.g. video and audio). In Live Smooth Streaming there are typically multiple fragmented MP4 streams coming from one or more encoders to the server.
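A minimal sketch of the push side, using only Python's standard http.client: push_url builds the ingest URL from the publishing point URL and a stream ID, following the pattern above, and push_fragments sends an iterable of already-encoded fragment byte strings in one long-running HTTP POST. Both function names are hypothetical. The use of chunked transfer encoding to keep the POST open is an assumption of this sketch; it also does not produce real fragmented MP4 data, send an EOS, or reconnect after a failure as a real encoder would.

```python
import http.client
from urllib.parse import urlsplit


def push_url(pubpoint_url, stream_id):
    """Ingest URL for one fragmented MP4 stream: <pubpoint>/streams(<streamID>)."""
    return f"{pubpoint_url.rstrip('/')}/streams({stream_id})"


def push_fragments(url, fragments, timeout=30):
    """Send an iterable of fragment byte strings in a single HTTP POST.

    Because the body is an iterable and no Content-Length header is given,
    http.client sends it with Transfer-Encoding: chunked, so the request stays
    open for as long as the iterable keeps producing fragments.
    Returns the HTTP status code of the server's response.
    """
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        conn.request("POST", parts.path, body=iter(fragments))
        response = conn.getresponse()
        response.read()
        return response.status
    finally:
        conn.close()


print(push_url("https://localhost/test.isml", "video1"))
# https://localhost/test.isml/streams(video1)
```

In a real encoder the fragments iterable would be a generator that yields each new fragment as soon as it is encoded, which is what turns a single POST into a long-running one.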

Encoders and other IIS MS servers use this approach to push live video fragments to a server; it is the typical configuration for encoder connections. IIS MS ingests the content and, depending on the publishing point configuration, can:

- archive it on local disk

- push it to other servers

- offer it in pull mode to other servers or to client players.

IIS MS can receive input over multiple HTTP POST requests. If a long-running HTTP POST fails, IIS MS waits for the input source to reconnect and continue pushing video fragments. When the stream is finished, the encoder can send a specific EOS (end of stream) signal to inform IIS MS that the streaming has ended.

When IIS MS receives an EOS, the publishing point stays in the Stopped state, but DVR remains available to clients. If the encoder reconnects after an EOS, streaming does not restart. You can use a specific entry in the .isml file to instruct IIS MS to reset the publishing point when an encoder reconnects after an EOS. This is the tag that you can insert in the .isml file that configures the publishing point:

<meta name="restartOnEncoderReconnect" content="TRUE" />
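The EOS behaviour can be summarized as a small state machine. The Python class below is a hypothetical model written only to illustrate the rules above, not IIS MS code; the class name, the state strings and the restart_on_encoder_reconnect flag (standing in for the restartOnEncoderReconnect entry) are assumptions, and what a reset does to the archived content is deliberately not modelled.

```python
class PublishingPoint:
    """Toy model of the EOS / reconnect rules of a live publishing point."""

    def __init__(self, restart_on_encoder_reconnect=False):
        # Mirrors <meta name="restartOnEncoderReconnect" content="TRUE" />.
        self.restart_on_encoder_reconnect = restart_on_encoder_reconnect
        self.state = "Started"

    def on_eos(self):
        # Live ingest ends; content already ingested stays available for DVR.
        self.state = "Stopped"

    def on_encoder_reconnect(self):
        # After an EOS a reconnect is ignored unless the restart setting is on.
        if self.state == "Stopped" and self.restart_on_encoder_reconnect:
            self.state = "Started"
        return self.state


default_pp = PublishingPoint()
default_pp.on_eos()
print(default_pp.on_encoder_reconnect())    # Stopped

restarting_pp = PublishingPoint(restart_on_encoder_reconnect=True)
restarting_pp.on_eos()
print(restarting_pp.on_encoder_reconnect())  # Started
```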

To achieve fault tolerance, IIS MS can accept multiple input streams with the same streamID on the same publishing point, so two encoders can push video fragments to the same publishing point. IIS MS keeps only one fragment for each time code and discards the others; in this way you can run two encoders in parallel, with aligned time codes, to obtain fault tolerance. The time code is fundamental for input fragments, because IIS MS uses it to discriminate between video fragments with the same streamID. For this reason, pay attention to the following case: if a single encoder is active on a publishing point and it fails, the long-running HTTP POST stops and IIS MS waits for the encoder to reconnect. When the encoder reconnects, it must use a coherent time code, because IIS MS restarts streaming only when it starts receiving video fragments with a time code greater than the last time code ingested. If your encoder restarts the time code from zero, or from any value earlier than the last one ingested by IIS MS, streaming cannot restart, because those time codes are in the past relative to the last known time code ingested.
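The acceptance rule described above can be sketched as follows. This is a simplified model written for illustration, not IIS MS internals: the class name IngestTimeline is hypothetical, and keying the last time code on the (streamID, track) pair is an assumption, since one stream can carry several tracks.

```python
class IngestTimeline:
    """Model of the time-code rule: for each (streamID, track), accept a fragment
    only if its time code is greater than the last one ingested."""

    def __init__(self):
        self.last = {}  # (stream_id, track) -> last ingested time code

    def offer(self, stream_id, track, timecode):
        key = (stream_id, track)
        if key in self.last and timecode <= self.last[key]:
            return False  # duplicate from the other encoder, or a time code in the past
        self.last[key] = timecode
        return True


ingest = IngestTimeline()
print(ingest.offer("s1", "video", 0))           # True: encoder A delivers first
print(ingest.offer("s1", "video", 0))           # False: same fragment from encoder B
print(ingest.offer("s1", "video", 20_000_000))  # True: this time encoder B is first
print(ingest.offer("s1", "video", 20_000_000))  # False: duplicate from encoder A
print(ingest.offer("s1", "video", 0))           # False: an encoder restarted from zero
```

The last call shows why an encoder that resets its time code to zero after a failure cannot resume the stream.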

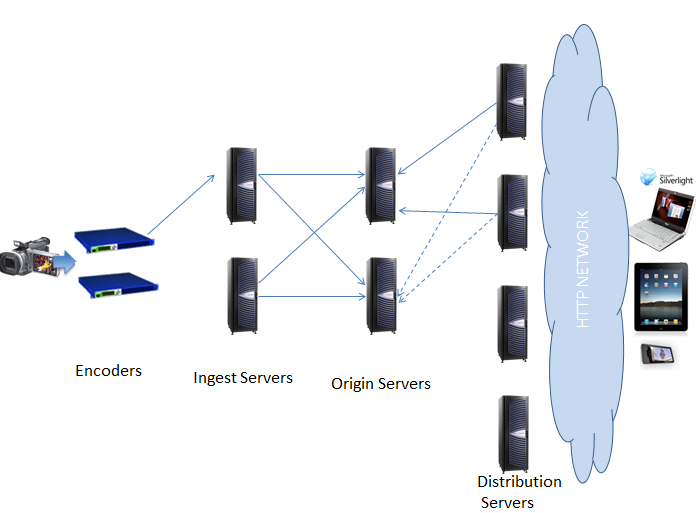

The figure below describes the suggested architecture for robust live streaming delivery:

As you can see, there are several layers, each with a different role:

- Encoders

- Ingest Servers

- Origin Servers

- Distribution Servers

Encoders:

Encoders take the video signal and encode it in Smooth Streaming format. Each encoder uses a long-running HTTP POST to push video fragments to the ingest server.

To achieve redundancy you can use two different models:

- 1 active encoder plus 1 inactive encoder used as a backup if the first one fails (the N+M scenario): the backup can be shared among several active streams and managed by the encoders' management software, which starts it when one of the active encoders fails. This is the less expensive scenario. In this case too, when the backup encoder starts after the failure of the active encoder, it must push video fragments with the same streamID and with a time code later than the last known time code sent to the ingest server by the failed encoder. There can be a short interruption in the streaming, which you can handle in the client player by catching the error and automatically retrying the connection to the server.

- 1 + 1 active encoders: in this scenario two active encoders push the same video fragments to the same ingest server. This is the suggested configuration for mission-critical streaming. The encoders can also push the signal to two ingest servers at the same time to provide complete redundancy. Both encoders must be time code aligned using a common source clock, such as embedded SMPTE timecode (VITC) in the source signal. As described before, ingest servers discard duplicate fragments, so if one of the encoders fails, the streaming continues without interruption.
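Both redundancy models depend on deterministic time codes: in 1 + 1 the two encoders must emit identical values, and in N+M the backup must resume with a later value. The sketch below assumes, for illustration only, that each encoder maps the embedded non-drop-frame SMPTE time code HH:MM:SS:FF at an integer frame rate onto the 10,000,000 ticks-per-second TimeScale used in the manifest; the functions smpte_to_ticks and can_resume are hypothetical, and real encoders may derive time codes differently.

```python
TIMESCALE = 10_000_000  # 100-ns ticks, as in the manifest's TimeScale


def smpte_to_ticks(timecode, fps, timescale=TIMESCALE):
    """Map a non-drop-frame SMPTE time code 'HH:MM:SS:FF' to manifest ticks.

    Assumes an integer frame rate (e.g. 25 or 30). Drop-frame time codes
    (29.97 fps, usually written with ';') need extra handling and are rejected.
    """
    if ";" in timecode:
        raise ValueError("drop-frame time code is not handled in this sketch")
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if not 0 <= frames < fps:
        raise ValueError("frame field out of range")
    total_frames = ((hours * 60 + minutes) * 60 + seconds) * fps + frames
    # Integer arithmetic: encoders reading the same SMPTE value always produce
    # exactly the same time code, with no floating-point drift.
    return total_frames * timescale // fps


def can_resume(new_timecode, last_ingested):
    """N+M case: the ingest server resumes only with a later time code."""
    return new_timecode > last_ingested


# 1 + 1: both encoders read the same embedded time code and emit the same value.
print(smpte_to_ticks("00:00:06:00", 25))  # 60000000
# N+M: the backup starts 5 s after the primary's last fragment at 01:00:00:00.
last = smpte_to_ticks("01:00:00:00", 25)
print(can_resume(smpte_to_ticks("01:00:05:00", 25), last))  # True
print(can_resume(0, last))  # False: a backup that restarts from zero
```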

Ingest Servers:

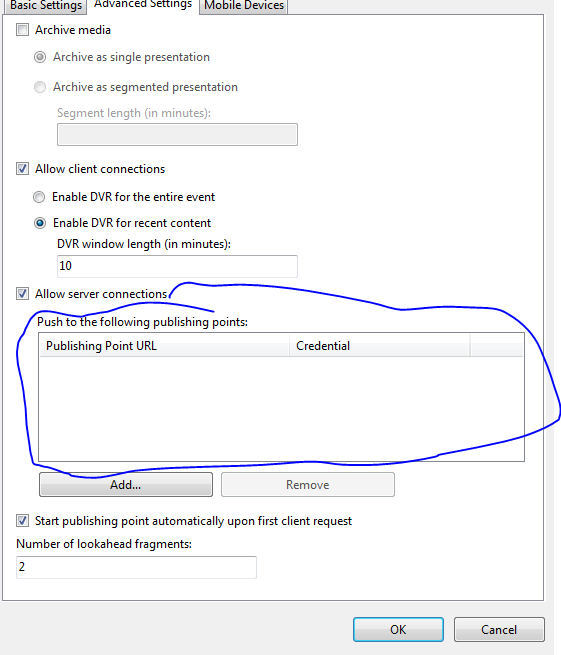

Ingest servers receive content from the encoders and push it to the origin servers (in general at least two origin servers). Typically, ingest servers do not have archiving enabled; they push content to two origin servers and manage reconnection in case of network failure.

In IIS MS you can configure the ingest servers to push content to the origin servers using the specific tab of the publishing point settings.

The ingest server pushes the content to all the servers listed in the configuration.
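The fan-out to all configured origins can be sketched as follows. The send callback is a hypothetical transport function (for example, one long-running POST per origin) that raises OSError when an origin is unreachable; the point illustrated is that a failure on one origin does not block delivery to the others, and the failed origins are returned so they can be reconnected. This is an illustrative model, not how IIS MS is implemented.

```python
def push_to_all(fragment, origins, send):
    """Push one fragment to every configured origin server.

    `send(origin, fragment)` is a hypothetical transport callback that raises
    OSError (e.g. ConnectionError) when an origin cannot be reached. A failure
    on one origin does not stop delivery to the others; the failed origins are
    returned so the caller can reconnect and retry them.
    """
    failed = []
    for origin in origins:
        try:
            send(origin, fragment)
        except OSError:
            failed.append(origin)
    return failed


def demo_send(origin, fragment):
    # Simulated network: origin2 is unreachable, the others accept the fragment.
    if origin == "origin2":
        raise ConnectionError(f"{origin} unreachable")


print(push_to_all(b"moof+mdat", ["origin1", "origin2"], demo_send))  # ['origin2']
```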

A failure of one of the ingest servers is similar to an encoder failure. If the encoders push to both ingest servers, there is no interruption. Otherwise, the encoders' management software detects the failure and starts the backup encoder, which pushes to the second ingest server and restarts the streaming. In this case there can be a short interruption, which can be handled in the client player as described before.

Origin Servers:

Origin servers hold all the information about the live stream: they archive the content and can serve client requests directly or feed distribution servers. Multiple origin servers run simultaneously to provide redundancy and scalability. Origin servers can also transmux content into other formats; for example, IIS MS 4.0 can transmux to HTTP Live Streaming for Apple devices. An origin server can also be the source for distribution servers and synchronize past information with them if needed. If an origin server fails, the other origin servers can immediately act as backups, because they are all running and have the same data. Distribution servers know where to re-request and retry the stream from a backup origin in case of an origin server failure. To serve client requests, either directly or indirectly through a generic HTTP network of HTTP cache/proxy/edge servers, origin servers must have the archive and client output options configured.

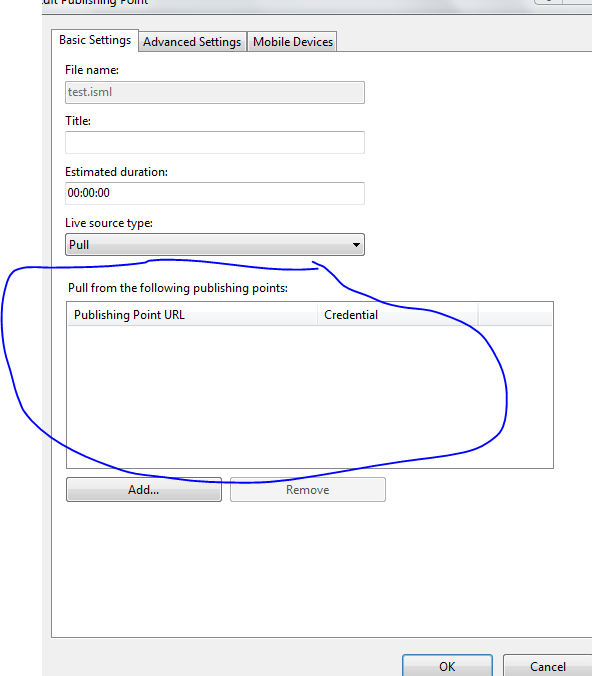

Distribution Servers:

Distribution servers are used to scale out the network by offloading archiving and client request serving from the origin server tier. Distribution servers use the pull input stream option and are configured with multiple origin server URLs for redundancy. As with origin servers, multiple distribution servers provide scalability and redundancy. It is possible to use IIS MS servers configured in pull mode, pointing to all the origin servers, in combination with IIS Application Request Routing (ARR). Using the specific tab of a publishing point, you can configure IIS MS to pull content from the origins and specify the list of origins from which the distribution server can pull.

If one of the origins fails, the distribution server starts pulling from the next one in the list. Note that the distribution server switches to the next server only in the case of an origin server failure (machine down, network error, etc.), not in the case of an encoder failure.
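The switching rule can be sketched as follows, as an illustrative model rather than the IIS MS implementation. The fetch callback and the OriginUnavailable exception are hypothetical: fetch returns fragment bytes, or None when the origin is healthy but the fragment does not exist yet (for example because the encoder stalled), and raises OriginUnavailable when the origin itself fails. Only that exception moves the pull to the next origin in the list.

```python
class OriginUnavailable(Exception):
    """Raised by the fetch callback when the origin fails (machine down, network error)."""


def pull_with_failover(origins, fetch, start_index=0):
    """Try origins in list order, starting from the one currently in use.

    A missing fragment (fetch returns None) does not trigger a switch, because
    the problem is upstream (encoder) and every origin would be missing it too.
    Returns (index_of_origin_used, data).
    """
    for step in range(len(origins)):
        index = (start_index + step) % len(origins)
        try:
            return index, fetch(origins[index])
        except OriginUnavailable:
            continue  # origin failure: move on to the next origin in the list
    raise OriginUnavailable("no origin server reachable")


def demo_fetch(origin):
    # Hypothetical origins: origin1 is down; origin2 is up but has no new fragment yet.
    if origin == "http://origin1/live.isml":
        raise OriginUnavailable("connection refused")
    return None


print(pull_with_failover(["http://origin1/live.isml", "http://origin2/live.isml"], demo_fetch))
# (1, None): switched because origin1 failed, not because the fragment was missing
```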

For more information about live streaming, I suggest reading two excellent posts by Sam Zhang (Developer Lead for live streaming in the IIS Media Services team) about Live Smooth Streaming: the first covers the design principles of Live Smooth Streaming, the second the suggested architecture for delivering real-time streaming with IIS MS.

If you want to learn more about configuring Live Smooth Streaming, you can use these resources on iis.net: