Framework for Composing and Submitting .Net Hadoop MapReduce Jobs

An updated version of this post can be found at:

If you have been following my blog, you will have seen that I have been putting together samples for writing .Net Hadoop MapReduce jobs using Hadoop Streaming. However, one thing that became apparent is that the samples could be restructured into a composable framework, enabling one to submit .Net-based MapReduce jobs whilst writing only the Mapper and Reducer types.

To this end I have put together a framework that allows one to submit MapReduce jobs using the following command line syntax:

```
MSDN.Hadoop.Submission.Console.exe -input "mobile/data/debug/sampledata.txt" -output "mobile/querytimes/debug" ^
  -mapper "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryMapper, MSDN.Hadoop.MapReduceFSharp" ^
  -reducer "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryReducer, MSDN.Hadoop.MapReduceFSharp" ^
  -file "%HOMEPATH%\MSDN.Hadoop.MapReduce\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll"
```

Here the mapper and reducer parameters are .Net types, given as “Namespace.Type, Assembly” names, that derive from the Mapper and Reducer abstract base classes (more on a combiner option later). The input, output, and file options are analogous to those of standard Hadoop Streaming submissions.

Under the covers, standard Hadoop Streaming is used: controlling executables handle the StdIn and StdOut operations and activate the required .Net types. The “file” parameter is required to specify the DLL containing the .Net types to be loaded at runtime, along with any other required files.
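The post does not show how the controlling executables activate the types. A minimal sketch, assuming plain .Net reflection is used: resolve the “Namespace.Type, Assembly” string passed to -mapper or -reducer with Type.GetType, check that the type derives from the expected base class, and create it with Activator.CreateInstance. The MapReduceBase stand-in and the TypeActivator and SampleMapper names are hypothetical.

```csharp
using System;

// Hypothetical stand-in for the framework's MapReduceBase (the real one lives in
// MSDN.Hadoop.MapReduceBase.dll); only what this sketch needs is declared.
public abstract class MapReduceBase
{
    public virtual void Setup() { }
}

// Hypothetical example type, used only to exercise the activator.
public class SampleMapper : MapReduceBase { }

public static class TypeActivator
{
    // Resolve a "Namespace.Type, Assembly" name, check that the type derives from T,
    // and create an instance through its parameterless constructor.
    public static T Create<T>(string typeName) where T : class
    {
        // With throwOnError: false, GetType returns null when the type or assembly is not found.
        Type type = Type.GetType(typeName, throwOnError: false);
        if (type == null)
            throw new TypeLoadException($"Could not load type '{typeName}'.");
        if (!typeof(T).IsAssignableFrom(type))
            throw new ArgumentException($"{type.FullName} does not derive from {typeof(T).Name}.");
        return (T)Activator.CreateInstance(type);
    }
}
```

Resolving by assembly name like this presumably relies on the DLL shipped with -file landing where the runtime probes for assemblies, such as the task's working directory alongside the controlling executable.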

As an aside, the framework and base classes are all written in F#, with sample Mappers and Reducers, and the abstract base class definitions, provided in both C# and F#. The code is based on the F# Streaming samples in my previous blog posts. I will cover more of the semantics of the code in a later post, but I wanted to provide some usage samples first.

As always the source can be downloaded from:

https://code.msdn.microsoft.com/Framework-for-Composing-af656ef7

Mapper and Reducer Base Classes

The following definitions outline the abstract base classes from which one needs to derive.

C# Base

```csharp
[AbstractClass]
public abstract class MapReduceBase
{
    protected MapReduceBase();
    public virtual IEnumerable<Tuple<string, object>> Cleanup();
    public virtual void Setup();
}
```

C# Base Mapper

```csharp
[AbstractClass]
public abstract class MapperBaseText : MapReduceBase
{
    protected MapperBaseText();
    public override IEnumerable<Tuple<string, object>> Cleanup();
    public abstract IEnumerable<Tuple<string, object>> Map(string value);
}
```

C# Base Reducer

```csharp
[AbstractClass]
public abstract class ReducerBase : MapReduceBase
{
    protected ReducerBase();
    public abstract IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> values);
}
```

F# Base

```fsharp
[<AbstractClass>]
type MapReduceBase() =
    abstract member Setup: unit -> unit
    default this.Setup() = ()

    abstract member Cleanup: unit -> IEnumerable<string * obj>
    default this.Cleanup() = Seq.empty
```

F# Base Mapper

```fsharp
[<AbstractClass>]
type MapperBaseText() =
    inherit MapReduceBase()

    abstract member Map: string -> IEnumerable<string * obj>

    // Cleanup is inherited from MapReduceBase; overriding it (rather than redeclaring
    // it as a new abstract member, which would hide the base slot) keeps one Cleanup.
    override this.Cleanup() = Seq.empty
```

F# Base Reducer

```fsharp
[<AbstractClass>]
type ReducerBase() =
    inherit MapReduceBase()

    abstract member Reduce: key:string -> values:IEnumerable<string> -> IEnumerable<string * obj>
```

The objective in defining these base classes was not only to support creating .Net Mappers and Reducers, but also to provide Setup and Cleanup operations that support In-Mapper optimizations, to use IEnumerable and sequences for publishing data from the Mappers and Reducers, and finally to provide a simple submission mechanism analogous to submitting Java-based jobs.

Each class provides a Setup function for performing tasks related to the instantiation of each Mapper and/or Reducer. The Mapper’s Map and Cleanup functions return an IEnumerable of Key/Value tuples; it is these tuples that represent the mapper’s output. Currently the key and value types are String and Object respectively, and both are converted to strings for the streaming output.
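As a sketch of this lifecycle, assuming a controller that reads StdIn line by line (the MapperHost and InMapperWordCount names and the stand-in base class are hypothetical, not the framework's code): Setup is called once, Map once per input line, and Cleanup at the end, with each tuple written as a tab-separated key/value line using the value's ToString(). The example mapper emits nothing from Map and publishes its totals from Cleanup, the In-Mapper pattern the base classes are meant to enable.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical stand-in mirroring the documented MapperBaseText members.
public abstract class MapperBaseText
{
    public virtual void Setup() { }
    public abstract IEnumerable<Tuple<string, object>> Map(string value);
    public virtual IEnumerable<Tuple<string, object>> Cleanup() => Array.Empty<Tuple<string, object>>();
}

public static class MapperHost
{
    // Setup once, Map each input line, then Cleanup; every tuple becomes a
    // "key<TAB>value" line with the value converted via ToString().
    public static void Run(MapperBaseText mapper, TextReader input, TextWriter output)
    {
        mapper.Setup();
        string line;
        while ((line = input.ReadLine()) != null)
            Write(output, mapper.Map(line));
        Write(output, mapper.Cleanup());
        output.Flush();
    }

    private static void Write(TextWriter output, IEnumerable<Tuple<string, object>> pairs)
    {
        foreach (var pair in pairs)
            output.WriteLine(pair.Item1 + "\t" + pair.Item2);
    }
}

// Hypothetical in-mapper word count: accumulate in Map, emit totals from Cleanup.
public class InMapperWordCount : MapperBaseText
{
    private SortedDictionary<string, int> counts;

    public override void Setup() => counts = new SortedDictionary<string, int>(StringComparer.Ordinal);

    public override IEnumerable<Tuple<string, object>> Map(string value)
    {
        foreach (var word in value.Split(new[] { ' ', '\t' }, StringSplitOptions.RemoveEmptyEntries))
            counts[word] = counts.TryGetValue(word, out var n) ? n + 1 : 1;
        return Array.Empty<Tuple<string, object>>(); // nothing emitted per line
    }

    public override IEnumerable<Tuple<string, object>> Cleanup()
    {
        foreach (var entry in counts)
            yield return Tuple.Create(entry.Key, (object)entry.Value);
    }
}
```

Map here is deliberately not an iterator method, so the counting runs as soon as Map is called rather than only when its result is enumerated.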

The Reducer takes a key together with an IEnumerable of the string representations of that key’s values, as output by the Mapper, and reduces these into an enumerable of Key/Object tuples. Once again, Cleanup can return values, allowing for In-Reducer optimizations.
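On the reduce side, Hadoop Streaming hands the controller “key<TAB>value” lines already sorted by key, so consecutive lines sharing a key form the values passed to Reduce. A minimal sketch of that grouping, with a hypothetical ReducerHost, a stand-in ReducerBase, and an illustrative SumReducer:

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical stand-in mirroring the documented ReducerBase members.
public abstract class ReducerBase
{
    public virtual void Setup() { }
    public abstract IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> values);
    public virtual IEnumerable<Tuple<string, object>> Cleanup() => Array.Empty<Tuple<string, object>>();
}

public static class ReducerHost
{
    // Input is sorted by key; the key is the text before the first tab.
    public static void Run(ReducerBase reducer, TextReader input, TextWriter output)
    {
        reducer.Setup();
        string currentKey = null;
        var values = new List<string>();
        string line;
        while ((line = input.ReadLine()) != null)
        {
            int tab = line.IndexOf('\t');
            string key = tab < 0 ? line : line.Substring(0, tab);
            string value = tab < 0 ? string.Empty : line.Substring(tab + 1);
            if (currentKey != null && key != currentKey)
            {
                Emit(output, reducer.Reduce(currentKey, values)); // key changed: flush the group
                values = new List<string>();
            }
            currentKey = key;
            values.Add(value);
        }
        if (currentKey != null)
            Emit(output, reducer.Reduce(currentKey, values)); // last group
        Emit(output, reducer.Cleanup());
        output.Flush();
    }

    private static void Emit(TextWriter output, IEnumerable<Tuple<string, object>> results)
    {
        foreach (var pair in results)
            output.WriteLine(pair.Item1 + "\t" + pair.Item2);
    }
}

// Illustrative reducer: sums integer values per key.
public class SumReducer : ReducerBase
{
    public override IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> values)
    {
        yield return Tuple.Create(key, (object)values.Sum(v => int.Parse(v)));
    }
}
```

This sketch buffers each key's values in a List for simplicity; a streaming implementation could yield them lazily so that a key with very many values need not fit in memory.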

Combiners

Combiners are supported in one of two ways. As is often the case, a Reducer can be reused as a Combiner. In addition, explicit support is provided for a Combiner through the following abstract class definition:

C# Base Combiner

```csharp
[AbstractClass]
public abstract class CombinerBase : MapReduceBase
{
    protected CombinerBase();
    public abstract IEnumerable<Tuple<string, object>> Combine(string key, IEnumerable<string> values);
}
```

F# Base Combiner

```fsharp
[<AbstractClass>]
type CombinerBase() =
    inherit MapReduceBase()

    abstract member Combine: key:string -> values:IEnumerable<string> -> IEnumerable<string * obj>
```

Using a Combiner follows exactly the same pattern as using mappers and reducers; for example:

```
-combiner "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinCombiner, MSDN.Hadoop.MapReduceCSharp"
```

The prototype for the Combiner is essentially the same as that of the Reducer, except that the function called for each key and its values is Combine rather than Reduce.
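The post does not show how a Reducer is reused as a Combiner. One plausible approach, sketched here with hypothetical names (ReducerAsCombiner, MinTimeReducer) and stand-in base classes, is an adapter that satisfies the Combiner contract by delegating to a Reducer. The check at the end compares reducing combined partial results against reducing all values directly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins mirroring the documented ReducerBase and CombinerBase members.
public abstract class ReducerBase
{
    public virtual void Setup() { }
    public abstract IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> values);
    public virtual IEnumerable<Tuple<string, object>> Cleanup() => Array.Empty<Tuple<string, object>>();
}

public abstract class CombinerBase
{
    public virtual void Setup() { }
    public abstract IEnumerable<Tuple<string, object>> Combine(string key, IEnumerable<string> values);
    public virtual IEnumerable<Tuple<string, object>> Cleanup() => Array.Empty<Tuple<string, object>>();
}

// Adapter: exposes any Reducer through the Combiner contract by delegating each call.
public sealed class ReducerAsCombiner : CombinerBase
{
    private readonly ReducerBase reducer;
    public ReducerAsCombiner(ReducerBase reducer) => this.reducer = reducer;
    public override void Setup() => reducer.Setup();
    public override IEnumerable<Tuple<string, object>> Combine(string key, IEnumerable<string> values) =>
        reducer.Reduce(key, values);
    public override IEnumerable<Tuple<string, object>> Cleanup() => reducer.Cleanup();
}

// Same logic as the post's MobilePhoneMinReducer: minimum TimeSpan per key.
public class MinTimeReducer : ReducerBase
{
    public override IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> values)
    {
        yield return Tuple.Create(key, (object)values.Select(v => TimeSpan.Parse(v)).Min());
    }
}
```

Reuse is only safe when the operation is associative and commutative and the Reducer's output value is valid Reducer input. The minimum of TimeSpans qualifies; the (Min, Avg, Max) tuple emitted by the MobilePhoneQueryReducer sample does not, since its output cannot be re-parsed as a TimeSpan and an average of averages is generally not the overall average.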

Binary and XML Processing

In my previous posts on Hadoop Streaming I provided samples for writing Binary and XML based Mappers. The composable framework also supports submitting jobs that use Binary and XML based Mappers. To support this, the following additional abstract classes have been defined:

C# Base Binary Mapper

```csharp
[AbstractClass]
public abstract class MapperBaseBinary : MapReduceBase
{
    protected MapperBaseBinary();
    public abstract IEnumerable<Tuple<string, object>> Map(string filename, Stream document);
}
```

C# Base XML Mapper

```csharp
[AbstractClass]
public abstract class MapperBaseXml : MapReduceBase
{
    protected MapperBaseXml();
    public abstract IEnumerable<Tuple<string, object>> Map(XElement element);
}
```

F# Base Binary Mapper

```fsharp
[<AbstractClass>]
type MapperBaseBinary() =
    inherit MapReduceBase()

    abstract member Map: filename:string -> document:Stream -> IEnumerable<string * obj>
```

F# Base XML Mapper

```fsharp
[<AbstractClass>]
type MapperBaseXml() =
    inherit MapReduceBase()

    abstract member Map: element:XElement -> IEnumerable<string * obj>
```

To use Mappers derived from these types, a “format” submission parameter is required. The supported values are Text, Binary, and XML; the default is “Text”.

To submit a binary streaming job one just has to use a Mapper derived from the MapperBaseBinary abstract class and use the binary format specification:

```
-format Binary
```

In this case the input into the Mapper will be a Stream object that represents a complete binary document instance.
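As an illustration of the binary contract, here is a hypothetical DocumentSizeMapper (not one of the post's samples) written against a stand-in MapperBaseBinary: it receives the file name and the complete document as a Stream, and emits the lower-cased extension with the document's size in bytes.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical stand-in mirroring the documented MapperBaseBinary members.
public abstract class MapperBaseBinary
{
    public virtual void Setup() { }
    public abstract IEnumerable<Tuple<string, object>> Map(string filename, Stream document);
    public virtual IEnumerable<Tuple<string, object>> Cleanup() => Array.Empty<Tuple<string, object>>();
}

// Hypothetical example: emits (lower-cased extension, document size in bytes).
public class DocumentSizeMapper : MapperBaseBinary
{
    public override IEnumerable<Tuple<string, object>> Map(string filename, Stream document)
    {
        // Count bytes by reading to the end; Length is not relied on because the stream
        // may not be seekable. Reading happens eagerly here, not during enumeration.
        long size = 0;
        var buffer = new byte[81920];
        int read;
        while ((read = document.Read(buffer, 0, buffer.Length)) > 0)
            size += read;

        string extension = Path.GetExtension(filename).ToLowerInvariant();
        return new[] { Tuple.Create(extension, (object)size) };
    }
}
```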

To submit an XML streaming job one just has to use a Mapper derived from the MapperBaseXml abstract class and use the XML format specification, along with a node to be processed within the XML documents:

```
-format XML -nodename Node
```

In this case the input into the Mapper will be an XElement node derived from the XML document based on the nodename parameter.
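How the custom Java input format splits XML into nodes is not covered here. As a rough in-memory approximation, assuming a whole document fits in memory, the sketch below loads one document with XDocument, passes every element whose local name equals the nodename value to Map, and then calls Cleanup. XmlMapperHost, StoreNameMapper, and the stand-in base class are hypothetical; matching on LocalName ignores XML namespaces, which is a design choice of this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Xml.Linq;

// Hypothetical stand-in mirroring the documented MapperBaseXml members.
public abstract class MapperBaseXml
{
    public virtual void Setup() { }
    public abstract IEnumerable<Tuple<string, object>> Map(XElement element);
    public virtual IEnumerable<Tuple<string, object>> Cleanup() => Array.Empty<Tuple<string, object>>();
}

public static class XmlMapperHost
{
    // Load one document and feed each element named `nodename` (any depth) to the mapper.
    public static List<Tuple<string, object>> Run(MapperBaseXml mapper, TextReader xml, string nodename)
    {
        var results = new List<Tuple<string, object>>();
        mapper.Setup();
        XDocument document = XDocument.Load(xml);
        foreach (XElement element in document.Descendants().Where(e => e.Name.LocalName == nodename))
            results.AddRange(mapper.Map(element));
        results.AddRange(mapper.Cleanup());
        return results;
    }
}

// Hypothetical example: emits (Name attribute, 1) for each matched element.
public class StoreNameMapper : MapperBaseXml
{
    public override IEnumerable<Tuple<string, object>> Map(XElement element)
    {
        // The explicit string conversion of a missing attribute yields null.
        string name = (string)element.Attribute("Name") ?? "unknown";
        yield return Tuple.Create(name, (object)1);
    }
}
```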

Samples

To demonstrate the submission framework here are some sample Mappers and Reducers with the corresponding command line submissions:

C# Mobile Phone Range (with In-Mapper optimization)

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using MSDN.Hadoop.MapReduceBase;

namespace MSDN.Hadoop.MapReduceCSharp
{
    public class MobilePhoneRangeMapper : MapperBaseText
    {
        private Dictionary<string, Tuple<TimeSpan, TimeSpan>> ranges;

        // Parse a tab-delimited line into (device platform, query time); null if malformed.
        private Tuple<string, TimeSpan> GetLineValue(string value)
        {
            try
            {
                string[] splits = value.Split('\t');
                string devicePlatform = splits[3];
                TimeSpan queryTime = TimeSpan.Parse(splits[1]);
                return new Tuple<string, TimeSpan>(devicePlatform, queryTime);
            }
            catch (Exception)
            {
                return null;
            }
        }

        /// <summary>
        /// Define a Dictionary to hold the (Min, Max) tuple for each device platform.
        /// </summary>
        public override void Setup()
        {
            this.ranges = new Dictionary<string, Tuple<TimeSpan, TimeSpan>>();
        }

        /// <summary>
        /// Build the Dictionary of the (Min, Max) tuple for each device platform.
        /// </summary>
        public override IEnumerable<Tuple<string, object>> Map(string value)
        {
            var range = GetLineValue(value);
            if (range != null)
            {
                if (ranges.ContainsKey(range.Item1))
                {
                    var original = ranges[range.Item1];
                    if (range.Item2 < original.Item1)
                    {
                        // Update Min amount
                        ranges[range.Item1] = new Tuple<TimeSpan, TimeSpan>(range.Item2, original.Item2);
                    }
                    if (range.Item2 > original.Item2)
                    {
                        // Update Max amount
                        ranges[range.Item1] = new Tuple<TimeSpan, TimeSpan>(original.Item1, range.Item2);
                    }
                }
                else
                {
                    ranges.Add(range.Item1, new Tuple<TimeSpan, TimeSpan>(range.Item2, range.Item2));
                }
            }
            // Nothing is emitted per line; results are emitted from Cleanup (In-Mapper optimization).
            return Enumerable.Empty<Tuple<string, object>>();
        }

        /// <summary>
        /// Return the Dictionary of the Min and Max values for each device platform.
        /// </summary>
        public override IEnumerable<Tuple<string, object>> Cleanup()
        {
            foreach (var range in ranges)
            {
                yield return new Tuple<string, object>(range.Key, range.Value.Item1);
                yield return new Tuple<string, object>(range.Key, range.Value.Item2);
            }
        }
    }

    public class MobilePhoneRangeReducer : ReducerBase
    {
        public override IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> value)
        {
            // Fold the values into a (Min, Max) tuple, starting from (MaxValue, MinValue).
            var baseRange = new Tuple<TimeSpan, TimeSpan>(TimeSpan.MaxValue, TimeSpan.MinValue);
            var rangeValue = value.Select(stringspan => TimeSpan.Parse(stringspan)).Aggregate(baseRange, (accSpan, timespan) =>
                new Tuple<TimeSpan, TimeSpan>((timespan < accSpan.Item1) ? timespan : accSpan.Item1, (timespan > accSpan.Item2) ? timespan : accSpan.Item2));
            yield return new Tuple<string, object>(key, rangeValue);
        }
    }
}
```

```
MSDN.Hadoop.Submission.Console.exe -input "mobilecsharp/data" -output "mobilecsharp/querytimes" ^
  -mapper "MSDN.Hadoop.MapReduceCSharp.MobilePhoneRangeMapper, MSDN.Hadoop.MapReduceCSharp" ^
  -reducer "MSDN.Hadoop.MapReduceCSharp.MobilePhoneRangeReducer, MSDN.Hadoop.MapReduceCSharp" ^
  -file "%HOMEPATH%\MSDN.Hadoop.MapReduceCSharp\bin\Release\MSDN.Hadoop.MapReduceCSharp.dll"
```

C# Mobile Min (with Mapper, Combiner, Reducer)

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using MSDN.Hadoop.MapReduceBase;

namespace MSDN.Hadoop.MapReduceCSharp
{
    public class MobilePhoneMinMapper : MapperBaseText
    {
        // Parse a tab-delimited line into (device platform, query time); null if malformed.
        private Tuple<string, object> GetLineValue(string value)
        {
            try
            {
                string[] splits = value.Split('\t');
                string devicePlatform = splits[3];
                TimeSpan queryTime = TimeSpan.Parse(splits[1]);
                return new Tuple<string, object>(devicePlatform, queryTime);
            }
            catch (Exception)
            {
                return null;
            }
        }

        public override IEnumerable<Tuple<string, object>> Map(string value)
        {
            var returnVal = GetLineValue(value);
            if (returnVal != null) yield return returnVal;
        }
    }

    // Emits the minimum query time per device platform within a single map task.
    public class MobilePhoneMinCombiner : CombinerBase
    {
        public override IEnumerable<Tuple<string, object>> Combine(string key, IEnumerable<string> value)
        {
            yield return new Tuple<string, object>(key, value.Select(timespan => TimeSpan.Parse(timespan)).Min());
        }
    }

    // Emits the overall minimum query time per device platform.
    public class MobilePhoneMinReducer : ReducerBase
    {
        public override IEnumerable<Tuple<string, object>> Reduce(string key, IEnumerable<string> value)
        {
            yield return new Tuple<string, object>(key, value.Select(timespan => TimeSpan.Parse(timespan)).Min());
        }
    }
}
```

```
MSDN.Hadoop.Submission.Console.exe -input "mobilecsharp/data" -output "mobilecsharp/querytimes" ^
  -mapper "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinMapper, MSDN.Hadoop.MapReduceCSharp" ^
  -reducer "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinReducer, MSDN.Hadoop.MapReduceCSharp" ^
  -combiner "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinCombiner, MSDN.Hadoop.MapReduceCSharp" ^
  -file "%HOMEPATH%\MSDN.Hadoop.MapReduceCSharp\bin\Release\MSDN.Hadoop.MapReduceCSharp.dll"
```

F# Mobile Phone Query

```fsharp
namespace MSDN.Hadoop.MapReduceFSharp

open System
open MSDN.Hadoop.MapReduceBase

// Extracts the QueryTime for each Platform Device
type MobilePhoneQueryMapper() =
    inherit MapperBaseText()

    // Performs the split into key/value
    let splitInput (value:string) =
        try
            let splits = value.Split('\t')
            let devicePlatform = splits.[3]
            let queryTime = TimeSpan.Parse(splits.[1])
            Some(devicePlatform, box queryTime)
        with
        // A short line raises IndexOutOfRangeException and a bad time raises
        // FormatException or OverflowException, none of which is an ArgumentException;
        // skip any malformed line, as the C# samples do.
        | _ -> None

    // Map the data from input name/value to output name/value
    override self.Map (value:string) =
        seq {
            let result = splitInput value
            if result.IsSome then
                yield result.Value
        }

// Calculates the (Min, Avg, Max) of the input stream query time (based on Platform Device)
type MobilePhoneQueryReducer() =
    inherit ReducerBase()

    override self.Reduce (key:string) (values:seq<string>) =
        let initState = (TimeSpan.MaxValue, TimeSpan.MinValue, 0L, 0L)
        let (minValue, maxValue, totalValue, totalCount) =
            values
            |> Seq.fold (fun (minValue, maxValue, totalValue, totalCount) value ->
                // Parse each value once
                let time = TimeSpan.Parse(value)
                (min minValue time, max maxValue time, totalValue + int64 time.TotalSeconds, totalCount + 1L)) initState
        Seq.singleton (key, box (minValue, TimeSpan.FromSeconds(float (totalValue / totalCount)), maxValue))
```

```
MSDN.Hadoop.Submission.Console.exe -input "mobile/data" -output "mobile/querytimes" ^
  -mapper "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryMapper, MSDN.Hadoop.MapReduceFSharp" ^
  -reducer "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryReducer, MSDN.Hadoop.MapReduceFSharp" ^
  -file "%HOMEPATH%\MSDN.Hadoop.MapReduceFSharp\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll"
```

F# Store XML (XML in Samples)

```fsharp
namespace MSDN.Hadoop.MapReduceFSharp

open System
open System.Collections.Generic
open System.Linq
open System.IO
open System.Text
open System.Xml
open System.Xml.Linq
open MSDN.Hadoop.MapReduceBase

// Extracts the business type and annual sales from each Store element
type StoreXmlElementMapper() =
    inherit MapperBaseXml()

    override self.Map (element:XElement) =
        // XML namespace URIs are identifiers and must match the data exactly (http, not https)
        let aw = "http://schemas.microsoft.com/sqlserver/2004/07/adventure-works/StoreSurvey"
        let demographics = element.Element(XName.Get("Demographics")).Element(XName.Get("StoreSurvey", aw))
        seq {
            if not (demographics = null) then
                let business = demographics.Element(XName.Get("BusinessType", aw)).Value
                let sales = Decimal.Parse(demographics.Element(XName.Get("AnnualSales", aw)).Value) |> box
                yield (business, sales)
        }

// Calculates the Total Revenue of the store demographics
type StoreXmlElementReducer() =
    inherit ReducerBase()

    override self.Reduce (key:string) (values:seq<string>) =
        // The mapper emits Decimal sales; sum as Decimal to match and to avoid Int32 overflow
        let totalRevenue =
            values
            |> Seq.fold (fun revenue value -> revenue + Decimal.Parse(value)) 0M
        Seq.singleton (key, box totalRevenue)
```

```
MSDN.Hadoop.Submission.Console.exe -input "stores/demographics" -output "stores/banking" ^
  -mapper "MSDN.Hadoop.MapReduceFSharp.StoreXmlElementMapper, MSDN.Hadoop.MapReduceFSharp" ^
  -reducer "MSDN.Hadoop.MapReduceFSharp.StoreXmlElementReducer, MSDN.Hadoop.MapReduceFSharp" ^
  -file "%HOMEPATH%\MSDN.Hadoop.MapReduceFSharp\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll" ^
  -nodename Store -format Xml
```

F# Binary Document (Word and PDF Documents)

```fsharp
namespace MSDN.Hadoop.MapReduceFSharp

open System
open System.Collections.Generic
open System.Linq
open System.IO
open System.Text
open System.Xml
open System.Xml.Linq
open DocumentFormat.OpenXml
open DocumentFormat.OpenXml.Packaging
open DocumentFormat.OpenXml.Wordprocessing
open iTextSharp.text
open iTextSharp.text.pdf
open MSDN.Hadoop.MapReduceBase

// Calculates the pages per author for Word (.docx) and PDF documents
type OfficePageMapper() =
    inherit MapperBaseBinary()

    let (|WordDocument|PdfDocument|UnsupportedDocument|) extension =
        if String.Equals(extension, ".docx", StringComparison.InvariantCultureIgnoreCase) then
            WordDocument
        else if String.Equals(extension, ".pdf", StringComparison.InvariantCultureIgnoreCase) then
            PdfDocument
        else
            UnsupportedDocument

    // XML namespace URIs must match the document package exactly (http, not https)
    let dc = XNamespace.Get("http://purl.org/dc/elements/1.1/")
    let cp = XNamespace.Get("http://schemas.openxmlformats.org/package/2006/metadata/core-properties")
    let unknownAuthor = "unknown author"
    let authorKey = "Author"

    let getAuthorsWord (document:WordprocessingDocument) =
        let coreFilePropertiesXDoc = XElement.Load(document.CoreFilePropertiesPart.GetStream())
        // Take the first dc:creator element and split based on a ";"
        let creators = coreFilePropertiesXDoc.Elements(dc + "creator")
        if Seq.isEmpty creators then
            [| unknownAuthor |]
        else
            let creator = (Seq.head creators).Value
            if String.IsNullOrWhiteSpace(creator) then
                [| unknownAuthor |]
            else
                creator.Split(';')

    let getPagesWord (document:WordprocessingDocument) =
        // return page count
        Int32.Parse(document.ExtendedFilePropertiesPart.Properties.Pages.Text)

    let getAuthorsPdf (document:PdfReader) =
        // For PDF documents perform the split on a ","
        if document.Info.ContainsKey(authorKey) then
            let creators = document.Info.[authorKey]
            if String.IsNullOrWhiteSpace(creators) then
                [| unknownAuthor |]
            else
                creators.Split(',')
        else
            [| unknownAuthor |]

    let getPagesPdf (document:PdfReader) =
        // return page count
        document.NumberOfPages

    // Map the data from input name/value to output name/value
    override self.Map (filename:string) (document:Stream) =
        let result =
            match Path.GetExtension(filename) with
            | WordDocument ->
                // Get access to the word processing document from the input stream
                use document = WordprocessingDocument.Open(document, false)
                // Process the word document with the mapper
                let pages = getPagesWord document
                let authors = getAuthorsWord document
                // close document
                document.Close()
                Some(pages, authors)
            | PdfDocument ->
                // Get access to the pdf processing document from the input stream
                let document = new PdfReader(document)
                // Process the pdf document with the mapper
                let pages = getPagesPdf document
                let authors = getAuthorsPdf document
                // close document
                document.Close()
                Some(pages, authors)
            | UnsupportedDocument ->
                None
        // Emit one (author, page count) pair per author
        if result.IsSome then
            snd result.Value
            |> Seq.map (fun author -> (author, (box << fst) result.Value))
        else
            Seq.empty

// Calculates the total pages per author
type OfficePageReducer() =
    inherit ReducerBase()

    override self.Reduce (key:string) (values:seq<string>) =
        let totalPages =
            values
            |> Seq.fold (fun pages value -> pages + Int32.Parse(value)) 0
        Seq.singleton (key, box totalPages)
```

```
MSDN.Hadoop.Submission.Console.exe -input "office/documents" -output "office/authors" ^
  -mapper "MSDN.Hadoop.MapReduceFSharp.OfficePageMapper, MSDN.Hadoop.MapReduceFSharp" ^
  -reducer "MSDN.Hadoop.MapReduceFSharp.OfficePageReducer, MSDN.Hadoop.MapReduceFSharp" ^
  -combiner "MSDN.Hadoop.MapReduceFSharp.OfficePageReducer, MSDN.Hadoop.MapReduceFSharp" ^
  -file "%HOMEPATH%\MSDN.Hadoop.MapReduceFSharp\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll" ^
  -file "C:\Reference Assemblies\itextsharp.dll" -format Binary
```

Optional Parameters

A few optional parameters are supported to expose some additional Hadoop Streaming options.

-numberReducers X

As expected, this specifies the number of reduce tasks to use.

-debug

This option turns on verbose mode and sets a job configuration that keeps the outputs of failed tasks.
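How the console maps these switches onto Hadoop Streaming is not shown. A hedged sketch, assuming -numberReducers becomes the streaming -numReduceTasks option and -debug becomes -verbose plus the Hadoop 1.x keep.failed.task.files setting; the OptionalStreamingArgs helper is hypothetical. Generic -D options have to precede streaming-specific options, so they are emitted first.

```csharp
using System;
using System.Collections.Generic;

public static class OptionalStreamingArgs
{
    // Hypothetical translation of the console's optional switches into
    // Hadoop Streaming arguments (generic -D options first).
    public static List<string> Build(int? numberReducers, bool debug)
    {
        var args = new List<string>();
        if (debug)
        {
            args.Add("-D");
            args.Add("keep.failed.task.files=true"); // Hadoop 1.x: keep files of failed tasks
        }
        if (numberReducers.HasValue)
        {
            if (numberReducers.Value < 0)
                throw new ArgumentOutOfRangeException(nameof(numberReducers));
            args.Add("-numReduceTasks");
            args.Add(numberReducers.Value.ToString());
        }
        if (debug)
            args.Add("-verbose");
        return args;
    }
}
```

In Hadoop, -numReduceTasks sets the number of reduce tasks exactly, and a value of 0 produces a map-only job.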

To view the supported options, use the help parameter, which displays:

```
Command Arguments:
-input (Required=true) : Input Directory or Files
-output (Required=true) : Output Directory
-mapper (Required=true) : Mapper Class
-reducer (Required=true) : Reducer Class
-combiner (Required=false) : Combiner Class (Optional)
-format (Required=false) : Input Format |Text(Default)|Binary|Xml|
-numberReducers (Required=false) : Number of Reduce Tasks (Optional)
-file (Required=true) : Processing Files (Must include Map and Reduce Class files)
-nodename (Required=false) : XML Processing Nodename (Optional)
-debug (Required=false) : Turns on Debugging Options
```

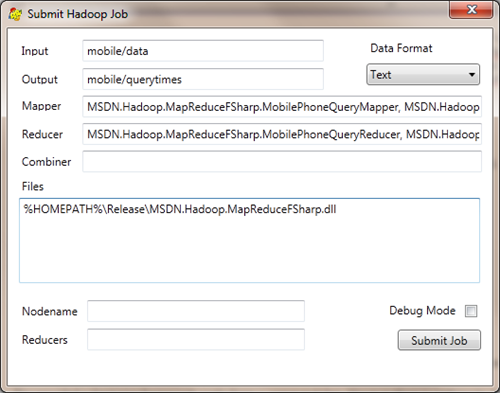

UI Submission

The provided submission framework works from the command line. However, there is nothing to stop one submitting the job from a UI, albeit a command console is still opened. To this end I have put together a simple UI that supports submitting Hadoop jobs.

This simple UI supports all the necessary options for submitting jobs.

Code Download

As mentioned, the executables and source code can be downloaded from:

https://code.msdn.microsoft.com/Framework-for-Composing-af656ef7

The source includes not only the .Net submission framework but also all the Java classes needed to support the Binary and XML job submissions. These rely on a custom Streaming JAR, which should be copied to the Hadoop lib directory.

To use the code, one just needs to reference the EXEs in the Release directory. This folder also contains MSDN.Hadoop.MapReduceBase.dll, which contains the abstract base class definitions.

Moving Forward

Moving forward, there are a few considerations for the code that I will be looking at over time:

Currently the abstract interfaces are all based on Object return types. Moving forward, it would be beneficial if the types were based on Generics, which would allow a better serialization process. Currently, value serialization is based on the string representation of an object’s value, and the key is restricted to a string. Better serialization processes, such as Protocol Buffers or .Net Serialization, would improve performance.
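One possible shape for the generic interfaces, sketched purely as an illustration (TypedMapperBase and QueryTimeMapper are hypothetical, not the author's design): values are strongly typed, and each mapper supplies an explicit, culture-invariant serializer instead of relying on object.ToString(). The example mapper reuses the column layout of the mobile phone samples (query time in column 1, device platform in column 3).

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical generic mapper base: typed values plus an explicit serializer.
public abstract class TypedMapperBase<TValue>
{
    public virtual void Setup() { }
    public abstract IEnumerable<KeyValuePair<string, TValue>> Map(string line);
    protected abstract string Serialize(TValue value);

    // Produces the "key<TAB>value" lines that the streaming output would carry.
    public IEnumerable<string> MapToLines(string line) =>
        Map(line).Select(kv => kv.Key + "\t" + Serialize(kv.Value));
}

public class QueryTimeMapper : TypedMapperBase<TimeSpan>
{
    public override IEnumerable<KeyValuePair<string, TimeSpan>> Map(string line)
    {
        string[] splits = line.Split('\t');
        // Malformed lines (too few columns or an unparsable time) produce no output.
        if (splits.Length > 3 && TimeSpan.TryParse(splits[1], CultureInfo.InvariantCulture, out var time))
            yield return new KeyValuePair<string, TimeSpan>(splits[3], time);
    }

    // "c" is the invariant constant TimeSpan format, e.g. 00:00:05.
    protected override string Serialize(TimeSpan value) => value.ToString("c", CultureInfo.InvariantCulture);
}
```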

Currently the code only supports a single key value, although multiple keys are supported by the streaming interface. Various options are available for dealing with multiple keys, and these will be next on my investigation list.

In a separate post I will cover what is actually happening under the covers.

Written by Carl Nolan