Avoid Bank Conflicts on tile_static Memory with C++ AMP

C++ AMP includes a performance optimization technology called tiling. There are three major ingredients for using tiling: scheduling thread tiles with a tiled parallel_for_each, declaring variables with the tile_static storage class, and using tile_barrier to synchronize threads in a tile. A variable declared with tile_static is stored in a special memory that is visible to all threads in a tile. Compared with global memory, this memory has low latency and high bandwidth. In this blog post, I’m going to talk about how tile_static memory is organized in GPU hardware, describe a performance issue called bank conflicts that you may hit while using tile_static memory, and then show techniques that can help you avoid bank conflicts.

Organization of tile_static memory

On GPU hardware, tile_static variables reside in a small on-chip, software-managed cache, which has much higher bandwidth and lower latency than global memory. In C++ AMP, we refer to such a cache as tile_static memory.

On some modern GPUs, tile_static memory consists of “n” equally sized memory banks which can be accessed simultaneously, and successive “m”-bit words are mapped to successive memory banks. The exact organization of tile_static memory (i.e. n and m) is hardware dependent. For example, on an Nvidia GTX 580 card or an ATI HD 5870 card, tile_static memory has 32 banks (n = 32) that are organized such that successive 32-bit words (m = 32) map to successive memory banks. Note that n may differ from hardware to hardware, while m is usually 32. In the rest of the post, I will assume m is 32.
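To make the mapping concrete, here is a minimal host-side sketch of how an address is assigned to a bank under the organization described above. The helper names bank_of and bank_of_element are hypothetical (they are not part of C++ AMP), and the defaults n = 32 and m = 32 are simply the GTX 580 values quoted in this post.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not a C++ AMP API): the bank that a byte offset within
// tile_static memory maps to, assuming successive m-bit words go to
// successive banks.
inline std::size_t bank_of(std::size_t byte_offset,
                           std::size_t num_banks = 32,  // n
                           std::size_t word_bits = 32)  // m
{
    assert(num_banks > 0 && word_bits >= 8 && word_bits % 8 == 0);
    const std::size_t word_index = byte_offset / (word_bits / 8);
    return word_index % num_banks;
}

// Bank of element i in an array of 32-bit values that starts at offset 0.
inline std::size_t bank_of_element(std::size_t i, std::size_t num_banks = 32)
{
    return bank_of(i * 4, num_banks);
}
```

With these assumptions, elements 0 and 32 of a float array both land in bank 0, while element 33 lands in bank 1.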

Bank Conflicts

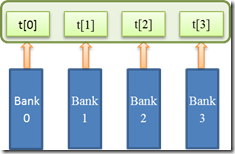

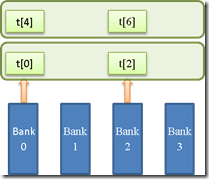

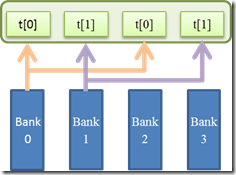

GPUs execute threads within a hardware scheduling unit in lock step. Let’s assume that the scheduling unit consists of “k” threads. k is hardware dependent, and usually k equals n or is a multiple of n. When the lock-step execution reaches an access to tile_static memory, if k equals n, then one memory request consisting of the requests from the k threads (assuming no divergence) is issued to tile_static memory. Otherwise, k/n requests are issued. If an issued request consists of n 32-bit words that fall into n distinct memory banks, the request can be serviced with one memory transaction, thus fully utilizing the bandwidth. If multiple words of the memory request fall in the same memory bank, there is a bank conflict. With conflicts, the accesses that target the same bank have to be serialized. As a result, the hardware has to split the request into separate conflict-free memory transactions, which does not fully utilize the bandwidth and decreases the throughput. When a request needs to be split into x conflict-free transactions, we say that the request causes an x-way bank conflict. Assuming n = 4 and k = 4, the following figure shows a conflict-free case and a 2-way conflict case.

(Assume a 1D tiled parallel_for_each, tid is a 1-dimensional tiled_index, t is a tile_static array of type int)

… = t[tid.local[0]]: conflict-free

… = t[tid.local[0] * 2]: 2-way bank conflict

For some recent hardware, a memory read request from a scheduling unit consisting of access to the same 32-bit word (from different threads in the unit) does not cause a bank conflict. This is called broadcasting. The hardware is able to broadcast the requested word to the requesting threads.

… = t[tid.local[0] % 2]: conflict-free with broadcasting
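The three access patterns above can be checked with a small host-side sketch. This is a simplified model, not a description of any particular GPU: the hypothetical function conflict_ways counts how many accesses the busiest bank receives for one request, and with the broadcast flag set it assumes that all reads of the same 32-bit word in a bank are served together. Real hardware may implement broadcasting more restrictively.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// Hypothetical model: given the word index that each thread of one scheduling
// unit accesses, return the number of serialized transactions (the "x" in an
// x-way bank conflict). Returns 0 for an empty request.
inline std::size_t conflict_ways(const std::vector<std::size_t>& word_indices,
                                 std::size_t num_banks, bool broadcast)
{
    assert(num_banks > 0);
    std::vector<std::set<std::size_t>> words(num_banks);  // distinct words per bank
    std::vector<std::size_t> accesses(num_banks, 0);      // all accesses per bank
    for (std::size_t w : word_indices) {
        words[w % num_banks].insert(w);
        ++accesses[w % num_banks];
    }
    std::size_t ways = 0;
    for (std::size_t b = 0; b < num_banks; ++b) {
        // Assumption: with broadcasting, repeated reads of one word cost one access.
        const std::size_t load = broadcast ? words[b].size() : accesses[b];
        ways = std::max(ways, load);
    }
    return ways;
}
```

For n = 4 and k = 4, the indices {0, 1, 2, 3} (t[tid.local[0]]) give 1, the indices {0, 2, 4, 6} (t[tid.local[0] * 2]) give 2, and the indices {0, 1, 0, 1} (t[tid.local[0] % 2]) give 1 with broadcasting and 2 without it.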

Note that if multiple threads in the scheduling unit try to write to the same word, it is a race. Depending on the hardware, it may or may not cause a bank conflict. In any case, which thread performs the write is undefined.

Whether an access pattern causes a bank conflict is hardware dependent. To identify bank conflicts that your code may introduce, you need to find out the following parameters from your hardware vendor:

- The number of memory banks n for tile_static memory.

- The number m for “m”-bit words that are mapped to successive memory banks.

- The number of threads k of a hardware scheduling unit.

- The broadcasting capability of the hardware.

Though these parameters are hardware dependent, they do not vary much across recent generations of GPUs. It is common that n is 16 or 32, and k is 32 or 64. Taking the Nvidia GTX 580 as an example, its tile_static memory has 32 memory banks, and each hardware scheduling unit (also referred to as a warp) has 32 threads. Therefore, it is capable of issuing one memory request to tile_static memory for all threads in the unit. The GTX 580 also offers the broadcasting mechanism, such that multiple reads of the same 32-bit word from different threads do not cause a bank conflict.

Avoid bank conflicts

If consecutive threads access tile_static memory with a unit stride of m bits, you generally won’t get bank conflicts. In this section, I will use two examples that do not have unit-stride accesses to show you how to identify bank conflicts and how to avoid them. I will use matrix transpose to show how memory padding avoids bank conflicts when accessing multi-dimensional data, and parallel reduction to show how changing the addressing scheme avoids bank conflicts on one-dimensional data.

Before diving into the examples, I want to emphasize two things. First, as I have explained, whether and how a bank conflict occurs is hardware dependent. The conflict-avoidance technique is thus also hardware dependent, and therefore may not be portable. Having said that, please keep in mind that the hardware parameters n and k described in the previous section do not vary greatly, so there is still a good chance that code with conflict avoidance is portable. Second, bank conflicts may not be a major performance issue for an application on specific hardware if there are other, more dominant issues. For such code, addressing only the bank-conflict issue may not yield significant (or any) performance improvement.

In this post, the performance tuning for the two examples is done on an Nvidia GTX 580 card. Therefore, I will assume the hardware parameters of the GTX 580 in this section. The performance tuning is based on measuring parallel_for_each execution time, thus excluding the time for data copies, etc. I will assume that you have read our blog posts on how to measure the performance of C++ AMP algorithms, and on the need for data warm-up when measuring.

Example 1: use memory padding for matrix transpose

Let’s look at the matrix transpose sample. Among multiple versions of transpose implemented in the sample, I will only dive into the one called transpose_tiled_even. Let’s take a look at this simple kernel:

    parallel_for_each(data.extent.tile<_tile_size, _tile_size>(),
        [=] (tiled_index<_tile_size, _tile_size> tidx) restrict(amp)
    {
        tile_static _value_type t1[_tile_size][_tile_size];
        // Stage the element in tile_static memory with row and column swapped.
        // This is the write analyzed below.
        t1[tidx.local[1]][tidx.local[0]] = data[tidx.global];
        // Wait until every thread in the tile has written its element.
        tidx.barrier.wait();
        index<2> idxdst(transpose(tidx.tile_origin) + tidx.local);
        data_transpose[idxdst] = t1[tidx.local[0]][tidx.local[1]];
    });

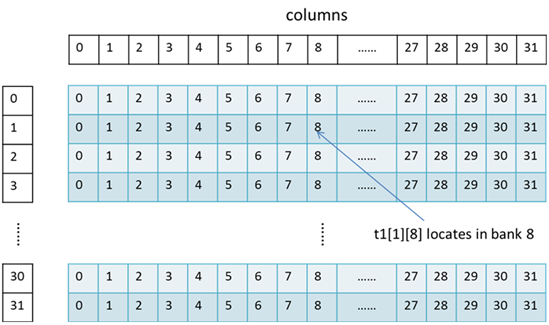

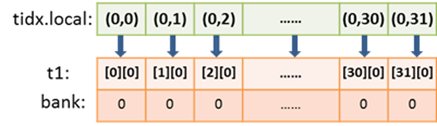

Assume that I use a _tile_size of 32 and float as _value_type. Each tile is then two dimensional with 32 x 32 threads, and has a tile_static 2D array t1 of type float (thus 32 bits per element). Now take a careful look at the line where each thread writes into t1, that is, t1[tidx.local[1]][tidx.local[0]] = data[tidx.global]. First, the figure below shows how t1[32][32] is laid out in memory in terms of memory banks:

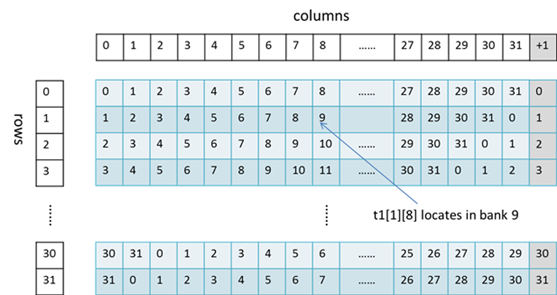

An element t1[i][j] maps to location 32 * i + j in a linearized memory layout. You can see that it goes to memory bank (32 * i + j) % 32 = j (since j is in [0, 31]). References that differ only in the “i” index are therefore in the same memory bank.

Now take the first scheduling unit, the threads with tidx.local from (0, 0) to (0, 31), and let’s see how they access t1. The thread with tidx.local (0, j) writes t1[j][0], which sits at linear location 32 * j and therefore in bank 0 for every j.

The memory write request from the first scheduling unit thus accesses just a single memory bank and causes a 32-way bank conflict. It takes 32 memory transactions to satisfy this request, which means it utilizes only 1/32 of the bandwidth. What causes the bank conflict is that the number of memory banks is the same as the number of columns of t1, so each column of t1 is located entirely in a single memory bank. Since all the threads within a scheduling unit write to the same column of t1, the bank conflict occurs.

How do you avoid such bank conflicts? It is simple: we use a technique called memory padding. We could pad the row size of t1 with one more element as:

tile_static _value_type t1[_tile_size][_tile_size + 1];

By doing so, we can ensure that t1’s elements in the same column in successive rows are located in different banks, as shown in the figure below:

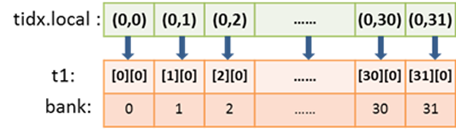

Now t1[i][j] maps to location 33 * i + j in a linearized memory layout. You can see that it goes to memory bank (33 * i + j) % 32 = (32 * i + i + j) % 32 = (i + j) % 32. In this example, j is the same for all threads in a scheduling unit while i ranges from 0 to 31, so every thread accesses a different memory bank and the bank conflicts are completely eliminated.
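The effect of the padding can be replayed on the host with a few lines of ordinary C++. This is only a sketch of the arithmetic above, assuming 32 banks of 32-bit words and 32 threads per scheduling unit; the function name column_write_ways is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model: the k threads of one scheduling unit each write
// t1[row][col] with row = 0..k-1 and a fixed col. row_pitch is the number of
// 32-bit elements per row of t1 (32 unpadded, 33 padded). Returns the number
// of accesses that hit the busiest bank.
inline std::size_t column_write_ways(std::size_t row_pitch,
                                     std::size_t col = 0,
                                     std::size_t k = 32,
                                     std::size_t num_banks = 32)
{
    assert(num_banks > 0 && col < row_pitch);
    std::vector<std::size_t> hits(num_banks, 0);
    for (std::size_t row = 0; row < k; ++row) {
        const std::size_t linear = row_pitch * row + col;  // linearized location
        ++hits[linear % num_banks];
    }
    return *std::max_element(hits.begin(), hits.end());
}
```

Under these assumptions, column_write_ways(32) evaluates to 32 (the 32-way conflict) and column_write_ways(33) evaluates to 1 (conflict-free), which matches the analysis of t1[_tile_size][_tile_size] versus t1[_tile_size][_tile_size + 1].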

I used the transpose_tiled_even function from the sample to transpose an 8192 x 8192 matrix with 32 x 32 tiling. By measuring the elapsed time of the parallel_for_each on GTX 580 (driver: 8.17.13.142), I observed:

- Before avoiding bank conflicts: 13.05 milliseconds

- After avoiding bank conflicts: 7.60 milliseconds

So on this card with these specified parameters, I can see a ~40% improvement in kernel execution time.

Padding is a common way to avoid bank conflicts when there is a need for consecutive threads to access multi-dimensional (e.g. 2D) data along the non-unit-stride dimension. However, padding also increases the tile_static memory consumption for each tile. Therefore, the occupancy rate may be reduced. When applying it, you must watch out for any adverse effects on overall performance.

In the above analysis, I used 32 x 32 tiling. Now, if I use 16 x 16 tiling, there will be an x-way conflict. What’s the value of x? How can padding be used to avoid or reduce bank conflicts? These questions are left as an exercise for you, dear reader ;-).

Example 2: use sequential addressing for parallel reduction

Now let’s look at the parallel reduction sample. Among the multiple versions of reduction implemented in the sample, I will first analyze version reduction_tiled_2 to show you the bank conflicts, and then show how version reduction_tiled_3 avoids them. Let’s first take a look at the loop that accesses a tile_static array in reduction_tiled_2:

    tile_static float tile_data[_tile_size];
    // some code
    for (unsigned s = 1; s < _tile_size; s *= 2)
    {
        unsigned index = 2 * s * local_idx;
        if (index < _tile_size)
        {
            tile_data[index] += tile_data[index + s];
        }
        tidx.barrier.wait();
    }

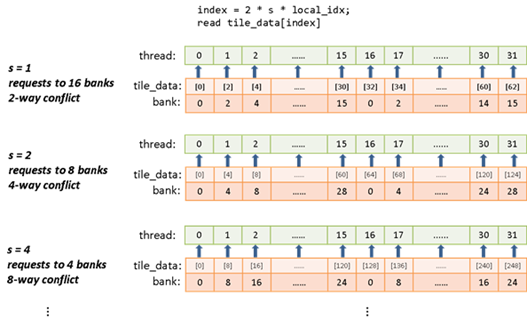

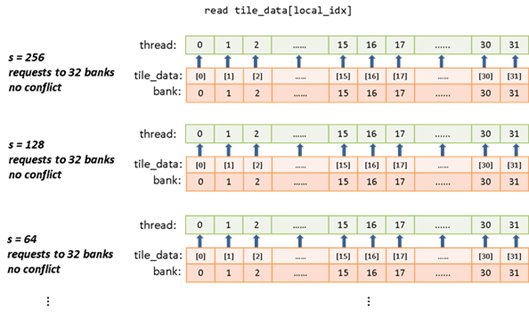

Assume _tile_size is 512, and local_idx is the thread index within a tile. The statement tile_data[index] += tile_data[index + s] contains a read from tile_data[index], a read from tile_data[index + s], and a write to tile_data[index]. Consider the read from tile_data[index] by threads 0 ~ 31 in the first scheduling unit. When s is 1, thread i reads tile_data[2 * i], so threads i and i + 16 hit the same bank and the request causes a 2-way bank conflict. The stride doubles with every iteration, and the conflicts get worse accordingly.

A similar bank conflict analysis also applies to the read from tile_data[index + s] and the write to tile_data[index].
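To see how the conflict degree changes as s grows, the interleaved addressing can be replayed on the host. The sketch below only models the read from tile_data[index] by the first scheduling unit, assuming 32 banks of 32-bit words and 32 threads per unit; interleaved_ways is a hypothetical name, not something from the sample.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model of reduction_tiled_2's addressing: threads 0..k-1 each
// access tile_data[2 * s * local_idx] if that index is inside the tile.
// Returns the number of accesses that hit the busiest bank.
inline std::size_t interleaved_ways(std::size_t s,
                                    std::size_t tile_size = 512,
                                    std::size_t k = 32,
                                    std::size_t num_banks = 32)
{
    assert(num_banks > 0 && s > 0);
    std::vector<std::size_t> hits(num_banks, 0);
    for (std::size_t local_idx = 0; local_idx < k; ++local_idx) {
        const std::size_t index = 2 * s * local_idx;
        if (index < tile_size) {
            ++hits[index % num_banks];  // float elements: one word per element
        }
    }
    return *std::max_element(hits.begin(), hits.end());
}
```

Under these assumptions the model reports a 2-way conflict for s = 1, 4-way for s = 2, 8-way for s = 4, and 16-way for s = 8 and s = 16. After that, fewer threads remain active and the degree falls again, down to 1 for s = 256, where only thread 0 does any work.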

In the code, the bank conflicts are due to successive threads addressing elements in the tile_data array with a stride of s * 2 (interleaved addressing). To avoid the bank conflicts, we need to change the addressing so that successive threads access successive elements in the tile_data array (sequential addressing). reduction_tiled_3 in the sample implements such addressing.

    for (unsigned s = _tile_size / 2; s > 0; s /= 2)
    {
        if (local_idx < s)
        {
            tile_data[local_idx] += tile_data[local_idx + s];
        }
        tidx.barrier.wait();
    }

Let’s illustrate how the read from tile_data[local_idx] is performed with the code above:

Note that the index used for the access is always the thread index within the tile. Therefore, the stride stays at 1 regardless of how s changes. As a result, there is no bank conflict with such sequential addressing. Similarly, there is no bank conflict for reading from tile_data[local_idx + s] and writing to tile_data[local_idx]. For accessing 1D data in tile_static memory, it is important to use sequential addressing to avoid bank conflicts. More generally, it is important to avoid even strides (strides that have 2 as a factor), since the number of banks is almost always a power of 2.
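As a sanity check that the two addressing schemes compute the same result, both loops can be replayed serially on the host. This is only a sketch: it assumes the tile size is a power of two, it replaces the barrier with a plain outer loop, and the function names are hypothetical. The last helper also checks, under the 32-bank assumption, that the sequential reads of tile_data[local_idx + s] by one scheduling unit all land in distinct banks.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Host-side replay of the reduction_tiled_2 loop (interleaved addressing).
inline float reduce_interleaved(std::vector<float> tile_data)
{
    const std::size_t n = tile_data.size();
    assert(n > 0 && (n & (n - 1)) == 0);  // assumption: power-of-two tile size
    for (std::size_t s = 1; s < n; s *= 2)
        for (std::size_t local_idx = 0; local_idx < n; ++local_idx) {
            const std::size_t index = 2 * s * local_idx;
            if (index < n) tile_data[index] += tile_data[index + s];
        }
    return tile_data[0];
}

// Host-side replay of the reduction_tiled_3 loop (sequential addressing).
inline float reduce_sequential(std::vector<float> tile_data)
{
    const std::size_t n = tile_data.size();
    assert(n > 0 && (n & (n - 1)) == 0);  // assumption: power-of-two tile size
    for (std::size_t s = n / 2; s > 0; s /= 2)
        for (std::size_t local_idx = 0; local_idx < s; ++local_idx)
            tile_data[local_idx] += tile_data[local_idx + s];
    return tile_data[0];
}

// True if the active threads among the first k each read
// tile_data[local_idx + s] from a different bank.
inline bool sequential_is_conflict_free(std::size_t s, std::size_t k = 32,
                                        std::size_t num_banks = 32)
{
    std::vector<bool> used(num_banks, false);
    for (std::size_t local_idx = 0; local_idx < k && local_idx < s; ++local_idx) {
        const std::size_t bank = (local_idx + s) % num_banks;
        if (used[bank]) return false;
        used[bank] = true;
    }
    return true;
}
```

Filling a 512-element vector with the values 1 to 512 makes both functions return 131328, and sequential_is_conflict_free holds for every s the loop visits.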

I used the sample to reduce 16,777,216 floats with _tile_size as 512. On GTX 580 (driver: 8.17.13.142), by measuring the execution time of the while loop that launches multiple parallel_for_each’s, I got:

- reduction_tiled_2: 5.21 milliseconds

- reduction_tiled_3: 4.14 milliseconds

So on this card with the specified parameters, I can see a ~20% improvement in kernel execution time.

Finally, it is worth mentioning another cause of bank conflicts that is not shown by the two examples in this blog post. The array of structs vs. struct of arrays issue also applies to tile_static memory. The use of an array of structs can sometimes lead to bank conflicts. When the size of the struct (in 32-bit words) is a multiple of 2 and consecutive threads access the same field of consecutive elements, there will be bank conflicts. This can be resolved by either using a struct of arrays or padding the struct. Keep this in mind when you explore bank conflict avoidance for your code.
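The array-of-structs case is the same strided-access arithmetic in disguise, which a small host-side sketch can show. It assumes 32 banks of 32-bit words, 32 threads per scheduling unit, and that thread i reads the first 32-bit field of element i; the name field_access_ways is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model: thread i reads the first 32-bit field of element i in an
// array of structs, where each struct occupies struct_words 32-bit words.
// Returns the number of accesses that hit the busiest bank.
inline std::size_t field_access_ways(std::size_t struct_words,
                                     std::size_t k = 32,
                                     std::size_t num_banks = 32)
{
    assert(num_banks > 0 && struct_words > 0);
    std::vector<std::size_t> hits(num_banks, 0);
    for (std::size_t i = 0; i < k; ++i) {
        ++hits[(struct_words * i) % num_banks];  // word index of element i's field
    }
    return *std::max_element(hits.begin(), hits.end());
}
```

Under these assumptions a two-word struct gives a 2-way conflict and a four-word struct a 4-way conflict, while padding the two-word struct to three words makes the access conflict-free, at the cost of extra tile_static memory.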

In Closing

In this post, I talked about the organization of tile_static memory on GPUs, explained what a bank conflict is, and used two examples to demonstrate how bank conflicts are caused and how to avoid them. As usual, questions and comments are welcome below or in our MSDN forum.