Windows Azure Security Best Practices – Part 7: Tips, Tools, Coding Best Practices

While writing the series of posts, I kept running into more best practices. So here are a few more items you should consider in securing your Windows Azure application.

Here are some tools, coding tips, and best practices:

- Running on the Operating System

- Getting the latest security patches

- If you can, run in partial trust

- Error handling

- How to implement your retry logic

- Logging errors in Windows Azure

- Access to Azure Storage

- Access to Blobs

- Storing your connection string

- Gatekeeper design pattern

- Rotating your storage keys

- Crypto services for your data security

Running on the Operating System

Get the Latest Security Patches

When you create a new application with Visual Studio, the default is to set the Guest OS version in the ServiceConfiguration.cscfg file like this:

osFamily="1" osVersion="*"

This is good because osVersion="*" gives you automatic updates, which is one of the key benefits of PaaS. It is less than optimal because osFamily="1" (Windows Server 2008 SP2) is not the latest OS. To use the latest OS version (Windows Server 2008 R2), the setting should look like this:

osFamily="2" osVersion="*"

Many customers unfortunately decide to lock to a particular version of the OS in the hope of increasing uptime by avoiding guest OS updates. This is a reasonable strategy only for enterprise customers who systematically test each update in staging and then schedule a VIP Swap of their mission-critical application running in production. For everyone else who does not test each guest OS update, not configuring automatic updates puts your Windows Azure application at risk.

-- from Troubleshooting Best Practices for Developing Windows Azure Applications

If You Can, Run in Partial Trust

By default, roles deployed to Windows Azure run under full trust. You need full trust if you invoke non-.NET code, use .NET libraries that require full trust, or do anything that requires admin rights. Restricting your code to partial trust limits what anyone who gains access to your code can do.

If your web application is compromised in some way, partial trust limits the damage an attacker can do. For example, by default a malicious attacker couldn’t modify any of your ASP.NET pages on disk or change any of the system binaries.

The role’s user account is already not an administrator on the virtual machine, and partial trust adds restrictions beyond those that Windows imposes. This trust level is enforced by .NET’s Code Access Security (CAS) support.

Partial trust is similar to the “medium trust” level in .NET. Access is granted only to certain resources and operations. In general, your code is allowed only to connect to external IP addresses over TCP, and is limited to accessing files and folders only in its “local store,” as opposed to any location on the system. Any libraries that your code uses must either work in partial trust or be specially marked with an “allow partially trusted callers” attribute.

You can explicitly configure the trust level for a role within the service definition file. The service definition schema provides an enableNativeCodeExecution attribute on the WebRole element and the WorkerRole element. To run your role under partial trust, you must add the enableNativeCodeExecution attribute on the WebRole or WorkerRole element and set it to false.

But partial trust does restrict what your application can do. Several useful libraries (such as those used for accessing the registry or accessing a well-known file location) don’t work in such an environment, sometimes for trivial reasons. Even some of Microsoft’s own frameworks don’t work in this environment because they don’t have the “allow partially trusted callers” attribute set.

See Windows Azure Partial Trust Policy Reference for information about what you get when you run in partial trust.

Handling Errors

Windows Azure automatically heals itself, but can your application?

Retry Logic

Transient faults are errors that occur because of some temporary condition such as network connectivity issues or service unavailability. Typically, if you retry the operation that resulted in a transient error a short time later, you find that the error has disappeared.

Different services can have different transient faults, and different applications require different fault handling strategies.

While it may not appear to be security related, building retry logic into your application is a best practice, because availability is part of security.
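To make the idea concrete, here is a minimal Python sketch of retrying an operation with exponential backoff. It assumes a hypothetical TransientError exception that your data-access code raises for temporary faults; the attempt count and delays are illustrative values, not recommendations from any Windows Azure library.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical exception raised for temporary faults (timeouts, throttling)."""


def retry_with_backoff(operation, max_attempts=4, base_delay=0.5,
                       max_delay=8.0, sleep=time.sleep):
    """Call operation(), retrying on TransientError with exponential backoff.

    The wait before retry n is base_delay * 2**n (capped at max_delay),
    scaled by random jitter. Any other exception propagates at once.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the fault to the caller
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(delay * random.uniform(0.5, 1.0))


# Usage: an operation that fails twice with a transient fault, then succeeds.
calls = {"count": 0}


def flaky_read():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("server busy")
    return "data"


# sleep is replaced with a no-op here so the example runs instantly.
print(retry_with_backoff(flaky_read, sleep=lambda seconds: None))  # data
```

Non-transient errors are not retried, and the random jitter keeps many role instances from retrying in lockstep against a service that is already struggling.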

Azure Storage

The Windows Azure Storage Client Library that ships with the SDK already includes retry behavior, but you need to switch it on. You can do this on any storage client by setting its RetryPolicy property.

SQL, Service Bus, Cache, and Azure Storage

SQL Azure, however, doesn’t provide a retry mechanism out of the box, since it uses the SQL Server client libraries. Service Bus doesn’t provide one either.

So the Microsoft patterns & practices team and the Windows Azure Customer Advisory Team developed the Transient Fault Handling Application Block. The block provides a number of ways to handle specific SQL Azure, Storage, Service Bus, and Cache conditions.

The Transient Fault Handling Application Block encapsulates information about the transient faults that can occur when you use the following Windows Azure services in your application:

- SQL Azure

- Windows Azure Service Bus

- Windows Azure Storage

- Windows Azure Caching Service

The block now includes enhanced configuration support and enhanced support for wrapping asynchronous calls, integrates the block's retry strategies with the Windows Azure Storage retry mechanism, and works with the Enterprise Library dependency injection container.
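The block separates two concerns: deciding whether an error is transient (a detection strategy) and deciding how long to wait between attempts (a retry strategy). The Python sketch below illustrates that separation; it is not the block's API. The SqlError class is hypothetical, and the error numbers are an assumed subset of the SQL Azure codes commonly treated as transient, so check the current SQL Azure documentation for the authoritative list.

```python
import time
from dataclasses import dataclass


class SqlError(Exception):
    """Hypothetical exception carrying a SQL Server error number."""

    def __init__(self, number):
        super().__init__(f"SQL error {number}")
        self.number = number


# Assumed subset of error numbers treated as transient, e.g. 40501 (service busy)
# and 40613 (database not currently available). Not an authoritative list.
TRANSIENT_SQL_ERRORS = {40197, 40501, 40613}


def is_transient_sql_error(exc):
    """Detection strategy: is this exception worth retrying?"""
    return isinstance(exc, SqlError) and exc.number in TRANSIENT_SQL_ERRORS


@dataclass
class IncrementalStrategy:
    """Retry strategy: wait initial, initial + increment, initial + 2*increment, ..."""
    retry_count: int = 3
    initial: float = 1.0
    increment: float = 2.0

    def delays(self):
        return [self.initial + i * self.increment for i in range(self.retry_count)]


class RetryPolicy:
    """Pairs a detection strategy with a retry strategy."""

    def __init__(self, is_transient, strategy, sleep=time.sleep):
        self.is_transient = is_transient
        self.strategy = strategy
        self.sleep = sleep

    def execute(self, action):
        for delay in self.strategy.delays():
            try:
                return action()
            except Exception as exc:
                if not self.is_transient(exc):
                    raise  # permanent error: retrying will not help
                self.sleep(delay)
        return action()  # last attempt; any error now reaches the caller


# Usage: two transient failures, then success. Recorded waits stand in for sleeping.
waits = []
policy = RetryPolicy(is_transient_sql_error, IncrementalStrategy(), sleep=waits.append)
outcomes = iter([SqlError(40501), SqlError(40613), "42 rows"])


def query():
    result = next(outcomes)
    if isinstance(result, Exception):
        raise result
    return result


print(policy.execute(query), waits)  # 42 rows [1.0, 3.0]
```

Keeping detection separate from timing lets the same retry strategy serve SQL Azure, Storage, or Service Bus, with only the "is this transient?" rule changing per service.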

Catch Your Errors

Unfortunately, systems fail, and Windows Azure is designed on the expectation that components will fail. Even with retry logic, you will occasionally experience a failure. You can add your own custom error handling to your ASP.NET Web applications. Custom error handling can ease debugging and improve customer satisfaction.

Eli Robillard, a member of the Microsoft MVP program, shows how you can create an error-handling mechanism that shows a friendly face to customers and still provides the detailed technical information developers will need in his article Rich Custom Error Handling with ASP.NET.

If an error page is displayed, it should serve both developers and end-users without sacrificing aesthetics. An ideal error page maintains the look and feel of the site, offers the ability to provide detailed errors to internal developers—identified by IP address—and at the same time offers no detail to end users. Instead, it gets them back to what they were seeking—easily and without confusion. The site administrator should be able to review errors encountered either by e-mail or in the server logs, and optionally be able to receive feedback from users who run into trouble.
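As a rough illustration of the "details for developers, a friendly page for everyone else" idea, here is a Python sketch that picks the error text based on the client's IP address and logs the full exception for the administrator either way. The developer network ranges, the message wording, and the function name are hypothetical; in an ASP.NET application this decision would live in the error-handling code the article describes.

```python
import ipaddress
import logging

logger = logging.getLogger("errors")

# Hypothetical address ranges used by the development team.
DEVELOPER_NETWORKS = [ipaddress.ip_network("10.0.0.0/8"),
                      ipaddress.ip_network("192.168.0.0/16")]


def error_page_text(exc, client_ip, error_id):
    """Return full details for developers and a friendly message for everyone else."""
    # Always record the full exception so the administrator can review it later.
    logger.error("Error %s for client %s", error_id, client_ip, exc_info=exc)
    addr = ipaddress.ip_address(client_ip)
    if any(addr in network for network in DEVELOPER_NETWORKS):
        return f"Error {error_id}: {type(exc).__name__}: {exc}"
    # End users see no technical detail, only a reference and a way back.
    return (f"Sorry, something went wrong (reference {error_id}). "
            "Please return to the home page and try again.")


print(error_page_text(ValueError("bad order id"), "203.0.113.7", "E1001"))
```

The reference ID shown to the user matches the logged entry, so a user's report can be tied back to the full details without exposing them.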

Logging Errors in Windows Azure

ELMAH (Error Logging Modules and Handlers) itself is extremely useful, and with a few simple modifications can provide a very effective way to handle application-wide error logging for your ASP.NET web applications. My colleague Wade Wegner describes the steps he recommends in his post Using ELMAH in Windows Azure with Table Storage.

Once ELMAH has been dropped into a running web application and configured appropriately, you get the following facilities without changing a single line of your code:

- Logging of nearly all unhandled exceptions.

- A web page to remotely view the entire log of recorded exceptions.

- A web page to remotely view the full details of any one logged exception, including colored stack traces.

- In many cases, you can review the original yellow screen of death that ASP.NET generated for a given exception, even with customErrors mode turned off.

- An e-mail notification of each error at the time it occurs.

- An RSS feed of the last 15 errors from the log.

To learn more about ELMAH, see the MSDN article Using HTTP Modules and Handlers to Create Pluggable ASP.NET Components by Scott Mitchell and Atif Aziz. And see the ELMAH project page.

Accessing Your Errors Remotely

There are a number of scenarios where it is useful to have the ability to manage your Windows Azure storage accounts remotely. For example, during development and testing, you might want to be able to examine the contents of your tables, queues, and blobs to verify that your application is behaving as expected. You may also need to insert test data directly into your storage.

In a production environment, you may need to examine the contents of your application's storage during troubleshooting or view diagnostic data that you have persisted. You may also want to download your diagnostic data for offline analysis and to be able to delete stored log files to reduce your storage costs.

A web search will reveal a growing number of third-party tools that can fulfill these roles. See Windows Azure Storage Management Tools for some useful tools.

Access to Storage

Keys

One thing to note right away is that no application should ever use any of the keys provided by Windows Azure as keys to encrypt data. An example would be the keys provided by Windows Azure for the storage service. These keys are configured to allow for easy rotation for security purposes or if they are compromised for any reason. In other words, they may not be there in the future, and may be too widely distributed.

Rotate Your Keys

When you create a storage account, your account is assigned two 256-bit account keys. Each HTTP(S) request is signed with one of these two keys, and the resulting signature is sent in a header that is part of the request. Having two keys allows for key rotation in order to maintain good security on your data. Typically, your applications would use one of the keys to access your data. Then, after a period of time (determined by you), you have your applications switch over to using the second key. Once you know your applications are using the second key, you retire the first key and then generate a new key. Using the two keys this way allows your applications access to the data without incurring any downtime.
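The rotation sequence can be sketched as a toy model in Python. It assumes a simplified account object that accepts a request signed with either of its two keys; the HMAC-SHA256 signing mirrors the primitive used by Shared Key authentication, but the request text being signed is a placeholder, not the real canonicalized request format, and the class and function names are hypothetical.

```python
import base64
import hashlib
import hmac
import secrets


def new_key():
    """A random 256-bit key, base64-encoded as storage keys are."""
    return base64.b64encode(secrets.token_bytes(32)).decode()


def sign(key, request):
    """HMAC-SHA256 over the request text (a placeholder for the real string-to-sign)."""
    digest = hmac.new(base64.b64decode(key), request.encode(), hashlib.sha256).digest()
    return base64.b64encode(digest).decode()


class StorageAccount:
    """Toy account with two active keys; not the real service."""

    def __init__(self):
        self.keys = {"primary": new_key(), "secondary": new_key()}

    def accepts(self, request, signature):
        # A request is valid if it was signed with either current key.
        return any(hmac.compare_digest(sign(key, request), signature)
                   for key in self.keys.values())

    def regenerate(self, name):
        self.keys[name] = new_key()


account = StorageAccount()
retired_key = account.keys["primary"]
app_key = account.keys["secondary"]  # 1. move applications to the secondary key
account.regenerate("primary")        # 2. regenerate the primary once nothing uses it
request = "GET /mycontainer/myblob"
print(account.accepts(request, sign(app_key, request)))      # True: no downtime
print(account.accepts(request, sign(retired_key, request)))  # False: old key is dead
```

Because both keys are valid at every moment, applications never see a window in which their key is rejected, as long as they have moved off a key before it is regenerated.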

See How to View, Copy, and Regenerate Access Keys for a Windows Azure Storage Account to learn how to view and copy access keys for a Windows Azure storage account, and to perform a rolling regeneration of the primary and secondary access keys.

Restricting Access to Blobs

By default, a storage container and any blobs within it may be accessed only by the owner of the storage account. If you want to give anonymous users read permissions to a container and its blobs, you can set the container permissions to allow public access. Anonymous users can read blobs within a publicly accessible container without authenticating the request. See Restricting Access to Containers and Blobs.

A Shared Access Signature is a URL that grants access rights to containers and blobs. A Shared Access Signature grants access to the Blob service resources specified by the URL's granted permissions. Care must be taken when using Shared Access Signatures in certain scenarios with HTTP requests, since HTTP requests disclose the full URL in clear text over the Internet.

By specifying a Shared Access Signature, you can grant users who have the URL access to a specific blob or to any blob within a specified container for a specified period of time. You can also specify what operations can be performed on a blob that's accessed via a Shared Access Signature. Supported operations include:

- Reading and writing blob content, block lists, properties, and metadata

- Deleting a blob

- Leasing a blob

- Creating a snapshot of a blob

- Listing the blobs within a container

Both block blobs and page blobs support Shared Access Signatures.

If a Shared Access Signature has rights that are not intended for the general public, then its access policy should be constructed with the least rights necessary. In addition, a Shared Access Signature should be distributed securely to intended users using HTTPS communication, should be associated with a container-level access policy for the purpose of revocation, and should specify the shortest possible lifetime for the signature.
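At its core, a Shared Access Signature is an HMAC-SHA256 signature, computed with the account key, over the granted permissions, time window, and resource. The Python sketch below shows that idea with read-only rights and a short lifetime by default. The sp, se, and sig parameter names follow the REST API's naming, but the string-to-sign is simplified and container-level access policies (used for revocation) are not modeled, so these URLs would not be accepted by the real Blob service. The account name and key are placeholders.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlencode, urlsplit

ACCOUNT_KEY = b"hypothetical-account-key"  # placeholder; load real keys from configuration
TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def _sign(resource, permissions, expiry):
    # Simplified string-to-sign; the real service defines its own format.
    string_to_sign = f"{permissions}\n{expiry}\n{resource}"
    digest = hmac.new(ACCOUNT_KEY, string_to_sign.encode(), hashlib.sha256).digest()
    return base64.b64encode(digest).decode()


def make_sas_url(base_url, resource, permissions="r", lifetime=timedelta(minutes=15)):
    """Issue a URL with least rights (read-only by default) and a short lifetime."""
    expiry = (datetime.now(timezone.utc) + lifetime).strftime(TIME_FORMAT)
    query = urlencode({"sp": permissions, "se": expiry,
                       "sig": _sign(resource, permissions, expiry)})
    return f"{base_url}{resource}?{query}"


def is_allowed(url, operation, now=None):
    """Check signature, expiry, and permission before serving the request."""
    parts = urlsplit(url)
    fields = {name: values[0] for name, values in parse_qs(parts.query).items()}
    expected = _sign(parts.path, fields["sp"], fields["se"])
    if not hmac.compare_digest(expected, fields["sig"]):
        return False  # URL was altered, e.g. permissions widened by the holder
    expiry = datetime.strptime(fields["se"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    if (now or datetime.now(timezone.utc)) >= expiry:
        return False  # signature has expired
    return operation in fields["sp"]


url = make_sas_url("https://myaccount.blob.core.windows.net", "/photos/cat.jpg")
print(is_allowed(url, "r"))  # True: read was granted
print(is_allowed(url, "w"))  # False: write was never granted
```

Anyone holding the URL can use it until it expires, which is why it should travel only over HTTPS and carry the shortest practical lifetime.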

See Creating a Shared Access Signature and Using a Shared Access Signature (REST API).

Storing the Connection String

If you have a hosted service that uses the Windows Azure Storage Client Library to access your Windows Azure Storage account, it is recommended that you store your connection string in the service configuration file. Storing the connection string in the service configuration file allows a deployed service to respond to changes in the configuration without redeploying the application.

Examples of when this is beneficial are:

- Testing – If you use a test account while your application is deployed to the staging environment and must switch to the live account when you move the application to the production environment.

- Security – If you must rotate the keys for your storage account due to the key in use being compromised.
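Here is a Python sketch of the idea, assuming configuration values are read through a function you supply. In a .NET role the equivalents are RoleEnvironment.GetConfigurationSettingValue and the RoleEnvironment.Changed event; the setting name DataConnectionString and the StorageSettings class are hypothetical. The connection string follows the familiar DefaultEndpointsProtocol/AccountName/AccountKey layout.

```python
def parse_connection_string(value):
    """Split 'Name=Value;Name=Value' pairs into a dict.

    Split on the first '=' only, because base64 account keys can end in '='.
    """
    pairs = (part.split("=", 1) for part in value.split(";") if part.strip())
    return {name.strip(): val.strip() for name, val in pairs}


class StorageSettings:
    """Holds the current connection settings and reloads them on a config change."""

    def __init__(self, read_setting, setting_name="DataConnectionString"):
        self._read_setting = read_setting  # e.g. a wrapper over the role's configuration
        self._setting_name = setting_name  # hypothetical setting name
        self.current = parse_connection_string(read_setting(setting_name))

    def on_configuration_changed(self):
        """Re-read the setting; return True if storage clients need to be rebuilt."""
        latest = parse_connection_string(self._read_setting(self._setting_name))
        changed = latest != self.current
        self.current = latest
        return changed


# Usage: switch from a test account to the live account without redeploying.
config = {"DataConnectionString":
          "DefaultEndpointsProtocol=https;AccountName=mytestaccount;AccountKey=dGVzdA=="}
settings = StorageSettings(config.get)
config["DataConnectionString"] = (
    "DefaultEndpointsProtocol=https;AccountName=myliveaccount;AccountKey=bGl2ZQ==")
print(settings.on_configuration_changed(), settings.current["AccountName"])  # True myliveaccount
```

The same handler covers both cases above: pointing staging at the live account, and picking up a regenerated key after a compromise.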

For more information on configuring the connection string, see Configuring Connection Strings.

For more information about using the connection strings, see Reading Configuration Settings for the Storage Client Library and Handling Changed Settings.

Gatekeeper Design Pattern

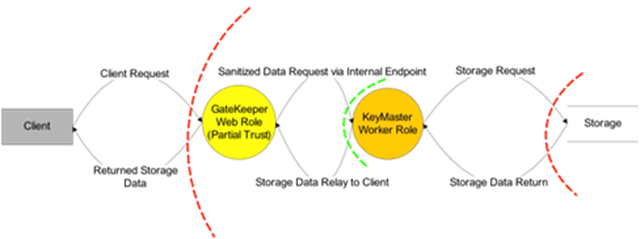

A Gatekeeper is a design pattern in which access to storage is brokered so as to minimize the attack surface of privileged roles by limiting their interaction to communication over private internal channels and only to other web/worker roles.

The pattern is explained in the paper Security Best Practices For Developing Windows Azure Applications from Microsoft Download.

The pattern can best be illustrated by the following example, which uses two roles deployed on separate VMs. In the event of a successful attack on the web role, privileged key material is not compromised.

- The GateKeeper – A Web role that services requests from the Internet. Since these requests are potentially malicious, the Gatekeeper is not trusted with any duties other than validating the input it receives. The GateKeeper is implemented in managed code and runs with Windows Azure Partial Trust. The service configuration settings for this role do not contain any Shared Key information for use with Windows Azure Storage.

- The KeyMaster – A privileged backend worker role that only takes inputs from the Gatekeeper and does so over a secured channel (an internal endpoint, or queue storage – either of which can be secured with HTTPS). The KeyMaster handles storage requests fed to it by the GateKeeper, and assumes that the requests have been sanitized to some degree. The KeyMaster, as the name implies, is configured with Windows Azure Storage account information from the service configuration to enable retrieval of data from Blob or Table storage. Data can then be relayed back to the requesting client. Nothing about this design requires Full Trust or Native Code, but it offers the flexibility of running the KeyMaster in a higher privilege level if necessary.
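The division of duties can be sketched in Python with an in-process queue standing in for the internal endpoint or storage queue between the two roles. Everything here is hypothetical (the blob-name rule, the class names, and the dictionary that stands in for Blob storage); the point is that only the KeyMaster ever holds the storage key, and malformed input is rejected before it reaches it.

```python
import queue
import re
import threading

# Hypothetical validation rule: lowercase blob paths only.
VALID_BLOB_NAME = re.compile(r"^[a-z0-9][a-z0-9/_.-]{0,254}$")


class KeyMaster:
    """Privileged back end: the only component that holds the storage key."""

    def __init__(self, storage_key, blob_store):
        self._storage_key = storage_key  # in practice read from service configuration
        self._blob_store = blob_store    # stands in for Blob storage

    def handle(self, request):
        # Requests are assumed to be sanitized by the GateKeeper already.
        return self._blob_store.get(request["blob"])


class GateKeeper:
    """Internet-facing role: validates input and holds no storage keys."""

    def __init__(self, channel):
        self._channel = channel  # stands in for an internal endpoint or queue

    def get_blob(self, blob_name, timeout=5):
        if not VALID_BLOB_NAME.match(blob_name) or ".." in blob_name:
            return None  # malformed input never reaches the KeyMaster
        reply = queue.Queue(maxsize=1)
        self._channel.put(({"blob": blob_name}, reply))
        return reply.get(timeout=timeout)


def start_keymaster(channel, keymaster):
    """Serve requests from the channel on a background thread (the 'worker role')."""
    def loop():
        while (item := channel.get()) is not None:
            request, reply = item
            reply.put(keymaster.handle(request))

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return thread


channel = queue.Queue()
worker = start_keymaster(channel, KeyMaster("storage-key", {"reports/q1.txt": b"Q1"}))
front_end = GateKeeper(channel)
print(front_end.get_blob("reports/q1.txt"))   # b'Q1'
print(front_end.get_blob("../web.config"))    # None
channel.put(None)                             # shut down the worker loop
worker.join(timeout=5)
```

If the GateKeeper is compromised, the attacker gains a component with no key material and only the narrow request shape the KeyMaster is willing to serve.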

Multiple Keys

In scenarios where a partial-trust Gatekeeper cannot be placed in front of a full-trust role, a multi-key design pattern can be used to protect trusted storage data. An example case of this scenario might be when a PHP web role is acting as a front-end web role, and placing a partial trust Gatekeeper in front of it may degrade performance to an unacceptable level.

The multi-key design pattern has some advantages over the Gatekeeper/KeyMaster pattern:

- Providing separation of duty for storage accounts. If Web Role A, the untrusted front end, is compromised, only the untrusted storage account and its associated key are lost.

- No internal service endpoints need to be specified. Multiple storage accounts are used instead.

- Windows Azure Partial Trust is not required for the externally-facing untrusted web role. Since PHP does not support partial trust, the Gatekeeper configuration is not an option for PHP hosting.

See Security Best Practices For Developing Windows Azure Applications from Microsoft Download.

Crypto Services

You can use encryption to help secure application-layer data. Cryptographic Service Providers (CSPs) are implementations of cryptographic standards, algorithms, and functions presented in a system program interface.

An excellent article that will provide you insight into how you can provide these kinds of services in your application is by Jonathan Wiggs in his MSDN Magazine article, Crypto Services and Data Security in Windows Azure. He explains, “A consistent recommendation is to never create your own or use a proprietary encryption algorithm. The algorithms provided in the .NET CSPs are proven, tested and have many years of exposure to back them up.”

There are many you can choose from. Microsoft provides:

Microsoft Base Cryptographic Provider. A broad set of basic cryptographic functionality that can be exported to other countries or regions.

Microsoft Strong Cryptographic Provider. An extension of the Microsoft Base Cryptographic Provider available with Windows 2000 and later.

Microsoft Enhanced Cryptographic Provider. Microsoft Base Cryptographic Provider with longer keys and additional algorithms.

Microsoft AES Cryptographic Provider. Microsoft Enhanced Cryptographic Provider with support for AES encryption algorithms.

Microsoft DSS Cryptographic Provider. Provides hashing, data signing, and signature verification capability using the Secure Hash Algorithm (SHA) and Digital Signature Standard (DSS) algorithms.

Microsoft Base DSS and Diffie-Hellman Cryptographic Provider. A superset of the DSS Cryptographic Provider that also supports Diffie-Hellman key exchange, hashing, data signing, and signature verification using the Secure Hash Algorithm (SHA) and Digital Signature Standard (DSS) algorithms.

Microsoft Enhanced DSS and Diffie-Hellman Cryptographic Provider. Supports Diffie-Hellman key exchange (a 40-bit DES derivative), SHA hashing, DSS data signing, and DSS signature verification.

Microsoft DSS and Diffie-Hellman/Schannel Cryptographic Provider. Supports hashing, data signing with DSS, generating Diffie-Hellman (D-H) keys, exchanging D-H keys, and exporting a D-H key. This CSP supports key derivation for the SSL3 and TLS1 protocols.

Microsoft RSA/Schannel Cryptographic Provider. Supports hashing, data signing, and signature verification. The algorithm identifier CALG_SSL3_SHAMD5 is used for SSL 3.0 and TLS 1.0 client authentication. This CSP supports key derivation for the SSL2, PCT1, SSL3 and TLS1 protocols.

Microsoft RSA Signature Cryptographic Provider. Provides data signing and signature verification.

Key Storage

The data security provided by encrypting data is only as strong as the keys used, and managing those keys is much more difficult than people may think at first. You should not use your Azure Storage keys as encryption keys. Instead, you can create your own using the providers in the previous section.

Storing your own key library within the Windows Azure Storage services is a good way to persist some secret information since you can rely on this data being secure in the multi-tenant environment and secured by your own storage keys. This is different from using storage keys as your cryptography keys. Instead, you could use the storage service keys to access a key library as you would any other stored file.

Key Management

To start, always assume that the processes you’re using to decrypt, encrypt and secure data are well-known to any attacker. With that in mind, make sure you cycle your keys on a regular basis and keep them secure. Give them only to the people who must make use of them and restrict your exposure to keys getting outside of your control.
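A versioned key library makes regular key cycling practical: new data is protected with the current key, while older keys stay available until the data they protect has been re-protected or deleted. Python's standard library has no AES implementation, so this sketch uses HMAC-SHA256 integrity tags to show the key-handling pattern; encryption would follow the same structure with a vetted cryptographic library. The KeyLibrary class and its key-ID scheme are hypothetical, and persisting the library as a blob is left out.

```python
import hashlib
import hmac
import secrets


class KeyLibrary:
    """Versioned application keys, generated independently of storage account keys."""

    def __init__(self):
        self._keys = {}
        self._next_id = 1
        self.current_id = None
        self.rotate()

    def rotate(self):
        """Add a fresh random 256-bit key and make it current; older keys are kept."""
        key_id = f"k{self._next_id}"
        self._next_id += 1
        self._keys[key_id] = secrets.token_bytes(32)
        self.current_id = key_id
        return key_id

    def retire(self, key_id):
        """Drop an old key once nothing protected by it is still needed."""
        if key_id == self.current_id:
            raise ValueError("rotate before retiring the current key")
        del self._keys[key_id]

    def protect(self, data):
        """Return (key_id, tag); storing key_id with the data lets you rotate later."""
        tag = hmac.new(self._keys[self.current_id], data, hashlib.sha256).hexdigest()
        return self.current_id, tag

    def verify(self, data, key_id, tag):
        key = self._keys.get(key_id)
        if key is None:
            return False  # key retired: the tag can no longer be checked
        expected = hmac.new(key, data, hashlib.sha256).hexdigest()
        return hmac.compare_digest(expected, tag)


library = KeyLibrary()
old_id, tag = library.protect(b"customer record")
library.rotate()                                        # cycle keys on a schedule
print(library.verify(b"customer record", old_id, tag))  # True: old key still available
library.retire(old_id)
print(library.verify(b"customer record", old_id, tag))  # False: old key retired
```

Recording the key ID next to each protected item is what lets you cycle keys without a flag day.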

Cleanup

While you are using keys, it is recommended that you store them in buffers such as byte arrays. That way, as soon as you’re done with the information, you can overwrite the buffer with zeroes or any other data that ensures the key is no longer in that memory.
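In .NET you would typically clear a byte[] with Array.Clear when you are done with it. A Python sketch of the same habit uses a mutable bytearray that is zeroed when the block exits, even if an exception is raised. This illustrates the pattern only: Python may leave other copies of the data (for example, the immutable bytes object the key was generated into) in memory until garbage collection, so it is not a guarantee. The key_buffer helper is hypothetical.

```python
import secrets
from contextlib import contextmanager


@contextmanager
def key_buffer(size=32):
    """Yield a mutable buffer of random key material, then overwrite it with zeroes."""
    buf = bytearray(secrets.token_bytes(size))
    try:
        yield buf
    finally:
        for i in range(len(buf)):
            buf[i] = 0  # overwrite in place so the key no longer sits in this buffer


with key_buffer() as key:
    kept = key          # keep a reference only to show the buffer was cleared
    print(len(key))     # 32

print(kept == bytearray(32))  # True
```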

Again, Jonathan’s article Crypto Services and Data Security in Windows Azure is a great place to study and learn how all the pieces fit together.

Summary

Security is not something that can be added on as the last step in your development process. Rather, it should be part of your ongoing development process. And you should make security decisions based on the needs of your own application.

Have a methodology where every part of your application development cycle considers security. Look for places in your architecture and code where someone might have access to your data.

Windows Azure makes security a shared responsibility. With Platform as a Service, you can focus on your application and your own security needs in deeper ways than before.

In a series of blog posts, I provided you a look into how you can secure your application in Windows Azure. This seven-part series described the threats, how you can respond, what processes you can put into place for the lifecycle of your application, and prescribed a way for you to implement best practices around the requirements of your application.

I also showed ways for you to incorporate user identity and some of the services Azure provides that will enable your users to access your cloud applications in new ways.

Here are links to the articles in this series:

- Windows Azure Security Best Practices -- Part 1: The Challenges, Defense in Depth.

- Windows Azure Security Best Practices -- Part 2: What Azure Provides Out-of-the-Box.

- Windows Azure Security Best Practices – Part 3: Identifying Your Security Frame.

- Windows Azure Security Best Practices – Part 4: What Else You Need to Do.

- Windows Azure Security Best Practices – Part 5: Claims-Based Identity, Single Sign On.

- Windows Azure Security Best Practices – Part 6: How Azure Services Extends Your App Security.

- Windows Azure Security Best Practices – Part 7: Tips, Tools, Coding Best Practices.

Bruce D. Kyle, ISV Architect Evangelist | Microsoft Corporation